[DeepLook] 4. Model Selection and Training

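After preprocessing the photos, we moved on to selecting and training a model.

The candidate models were ResNet, EfficientNet, and ArcFace. We chose ArcFace because it showed the strongest performance on face similarity. In the code below, ArcFace is implemented as an additive angular margin head on top of a ResNet-50 backbone.

The full, step-by-step process can be found in the Colab notebook.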

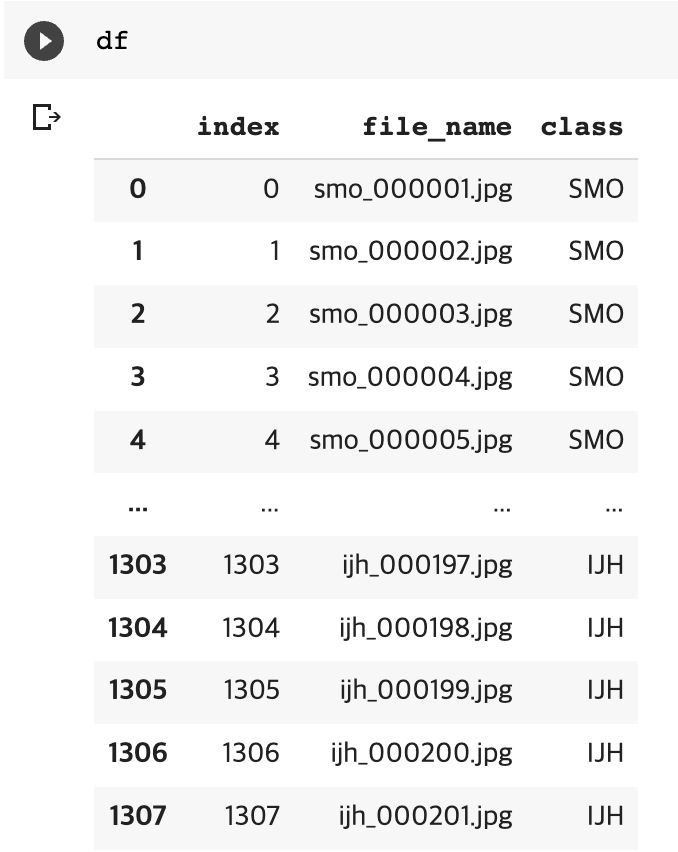

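1. Creating the CSV file

First, we created a CSV file that lists the preprocessed photos by file name (in `name_index` form) together with the label of the person in each photo.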
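The post doesn't show the code for this step. Here is a minimal sketch, assuming that all preprocessed images sit in one folder and are named like `SMO_001.jpg`, so the label is whatever comes before the last underscore. The helper name `build_label_csv` is hypothetical. The column names `file_name` and `class` are borrowed from the code later in this post. Full paths are stored so that `cv2.imread` can open them directly.

```python
import csv
from pathlib import Path


def build_label_csv(image_dir, csv_path, exts=(".jpg", ".jpeg", ".png")):
    """Write (file_name, class) rows for images named like 'SMO_001.jpg'.

    Hypothetical helper: assumes every image sits directly in image_dir and the
    class label is the part of the file name before the last underscore.
    """
    rows = []
    for path in sorted(Path(image_dir).iterdir()):
        if path.suffix.lower() not in exts:
            continue  # skip non-image files
        label = path.stem.rsplit("_", 1)[0]  # 'SMO_001' -> 'SMO'
        rows.append({"file_name": str(path), "class": label})

    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["file_name", "class"])
        writer.writeheader()
        writer.writerows(rows)
    return rows
```

The resulting file can then be loaded with `pd.read_csv(csv_path)` to get the `df` used in the next step.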

2. Splitting the train and validation datasets

For each class, we put 70% of the data into the train set and 30% into the validation set.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Collect the unique initials (class labels) in order of appearance
tmp = df.copy()
class_name_list = list(tmp['class'].unique())
print(class_name_list)  # ['SMO', 'CHJ', 'CDE', 'HAR', 'JJJ', 'JSI', 'OJY', 'SHE', 'SHG', 'IDH', 'IJH']

# Split each class separately with train_test_split: 70% train, 30% validation
train_parts, valid_parts = [], []
for class_name in class_name_list:
    tmp_with_class = tmp.loc[tmp['class'] == class_name]
    train_tmp, valid_tmp = train_test_split(tmp_with_class, test_size=0.3, random_state=42)
    train_parts.append(train_tmp)
    valid_parts.append(valid_tmp)

# Concatenate once at the end (avoids concatenating onto empty DataFrames)
train = pd.concat(train_parts)
valid = pd.concat(valid_parts)
```

After this step, the `train` variable holds 70% of the data for each initial and the `valid` variable holds the remaining 30%.
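Splitting class by class keeps the 70/30 ratio within every person, so no initial ends up over- or under-represented in validation. scikit-learn's `train_test_split` can produce a similar per-class split in a single call through its `stratify` argument. To make the idea concrete, here is a dependency-free sketch. The function `split_per_class` is hypothetical and does not reproduce scikit-learn's exact shuffling. Like scikit-learn does for a float `test_size`, it rounds the held-out count up.

```python
import math
import random
from collections import defaultdict


def split_per_class(samples, test_size=0.3, seed=42):
    """Split (file_name, label) pairs so every class keeps roughly the same ratio.

    Dependency-free sketch of a per-class (stratified) split.
    """
    by_class = defaultdict(list)
    for sample in samples:
        by_class[sample[1]].append(sample)

    rng = random.Random(seed)
    train, valid = [], []
    for label in sorted(by_class):
        items = by_class[label][:]          # copy before shuffling
        rng.shuffle(items)
        n_valid = math.ceil(len(items) * test_size)  # round the held-out share up
        valid.extend(items[:n_valid])
        train.extend(items[n_valid:])
    return train, valid
```

For example, a class with 10 images contributes 3 to validation, and a class with 7 images also contributes 3.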

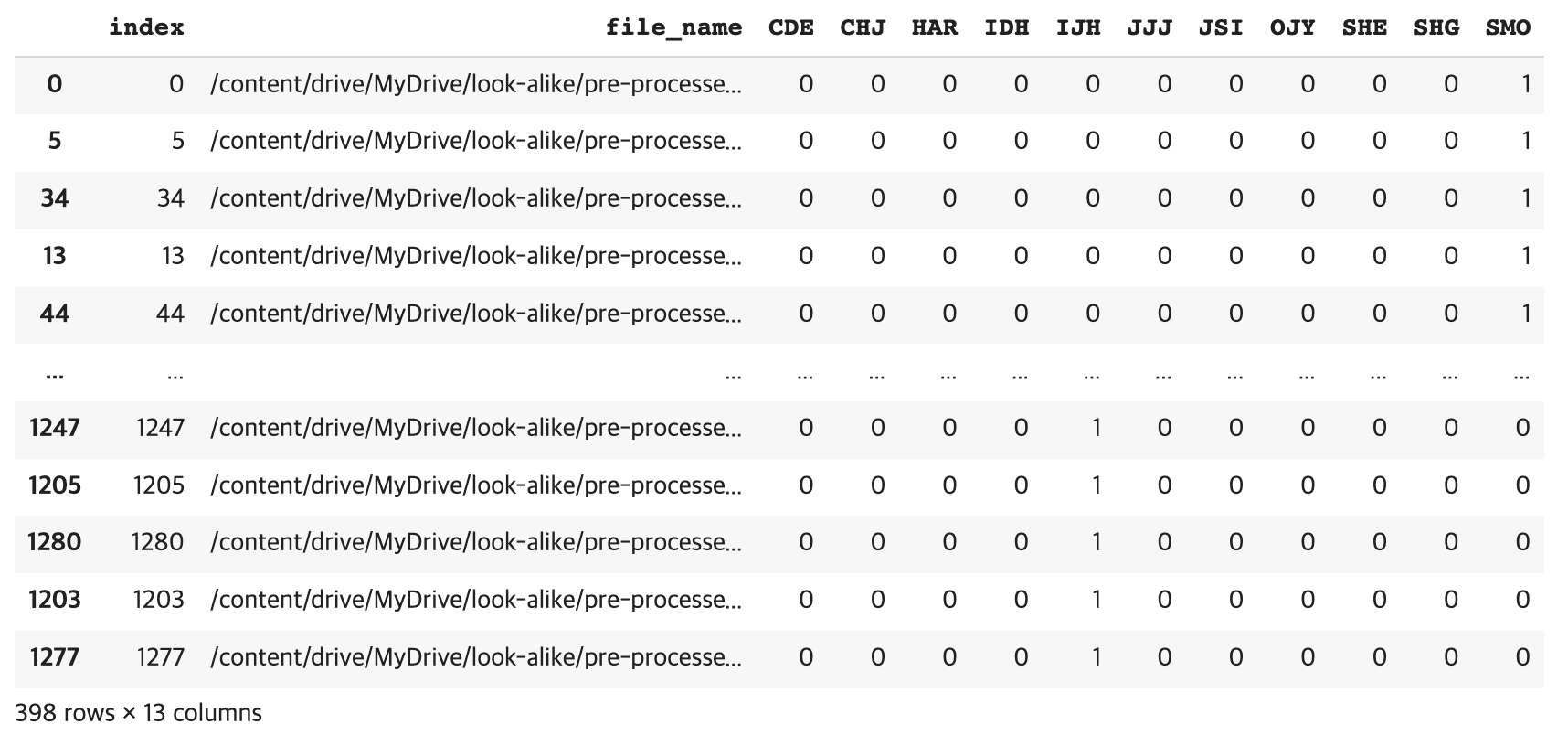

3. Applying one-hot encoding

```python
import pandas as pd

# dtype=int keeps 0/1 integers (pandas >= 2.0 returns bool columns by default)
one_hot_encoded = pd.get_dummies(tmp['class'], dtype=int)
train_one_hot_encoded = pd.get_dummies(train['class'], dtype=int)
valid_one_hot_encoded = pd.get_dummies(valid['class'], dtype=int)

# Append the one-hot columns and drop the original string label
data = pd.concat([tmp, one_hot_encoded], axis=1).drop(['class'], axis=1)
train = pd.concat([train, train_one_hot_encoded], axis=1).drop(['class'], axis=1)
valid = pd.concat([valid, valid_one_hot_encoded], axis=1).drop(['class'], axis=1)

valid  # display the result in the notebook
```

This one-hot encodes each initial, so every class becomes its own 0/1 column.
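`pd.get_dummies` orders the new columns alphabetically. This is why the class list in the training section later on is written in alphabetical order. During training, `torch.argmax(y, dim=1)` turns each one-hot row back into a class index. Because `train` and `valid` are encoded separately, their columns only line up when every class appears in both splits. The per-class split above is what guarantees this. The plain-Python sketch below shows the round trip. The helpers `one_hot_encode` and `argmax` are illustrative stand-ins, not pandas or PyTorch code.

```python
def one_hot_encode(labels):
    """Map string labels to one-hot rows, with columns in sorted order (as pd.get_dummies does)."""
    classes = sorted(set(labels))
    index = {name: i for i, name in enumerate(classes)}
    rows = []
    for label in labels:
        row = [0] * len(classes)
        row[index[label]] = 1
        rows.append(row)
    return classes, rows


def argmax(row):
    """Position of the largest entry, i.e. what torch.argmax(y, dim=1) returns per row."""
    return max(range(len(row)), key=row.__getitem__)


classes, rows = one_hot_encode(["SMO", "CHJ", "CDE", "SMO"])
print(classes)                    # ['CDE', 'CHJ', 'SMO']
print(rows[0])                    # [0, 0, 1]
print([argmax(r) for r in rows])  # [2, 1, 0, 2]
```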

4. CustomDataset

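First, we used the albumentations library to set up data augmentation. The training set receives random augmentations, while the validation set is only resized and normalized.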

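```python
import albumentations as A
from albumentations.pytorch import ToTensorV2

# Training: resize plus random augmentations
train_transform = A.Compose([
    A.Resize(224, 224),
    A.HorizontalFlip(p=0.5),
    A.RandomBrightnessContrast(p=0.5),  # default limits, applied half the time
    A.RandomBrightnessContrast(brightness_limit=(-0.3, 0.3), contrast_limit=(-0.3, 0.3), p=1),  # always applied
    A.ChannelShuffle(p=0.2),
    A.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),  # ImageNet statistics
    ToTensorV2()
])

# Validation: resize and normalize only, no random augmentation
valid_transform = A.Compose([
    A.Resize(224, 224),
    A.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ToTensorV2()
])
```

The train and valid sets are transformed differently mainly to prevent overfitting. If the model sees exactly the same training images every epoch, it is easier for it to memorize them than to learn features that generalize. Random flips, brightness/contrast changes, and channel shuffling present slightly different versions of each training image, which discourages memorization.

The validation set is left unaugmented (only resized and normalized) so that it measures performance on ordinary images the model has never trained on. Holding this data out of training is what lets us check how well the model generalizes.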
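Both pipelines end with the same `A.Normalize` step. With its default `max_pixel_value=255.0`, this step maps each 0 to 255 channel value to `(value - mean * 255) / (std * 255)` using the ImageNet statistics that the pretrained ResNet-50 expects. `HorizontalFlip` is one of the train-only random steps. The sketch below reproduces both operations on a single pixel and on a tiny image in plain Python. The helpers `normalize_pixel` and `horizontal_flip` are hypothetical illustrations, not albumentations' implementation.

```python
MEAN = (0.485, 0.456, 0.406)  # ImageNet RGB means used in A.Normalize above
STD = (0.229, 0.224, 0.225)   # ImageNet RGB standard deviations
MAX_PIXEL_VALUE = 255.0       # A.Normalize's default max_pixel_value


def normalize_pixel(rgb):
    """Normalize one 0-255 RGB pixel: (value - mean * 255) / (std * 255) per channel."""
    return tuple((v - m * MAX_PIXEL_VALUE) / (s * MAX_PIXEL_VALUE)
                 for v, m, s in zip(rgb, MEAN, STD))


def horizontal_flip(image):
    """Mirror an image stored as rows of pixels (what HorizontalFlip does when it fires)."""
    return [list(reversed(row)) for row in image]


print([round(c, 3) for c in normalize_pixel((255, 255, 255))])  # [2.249, 2.429, 2.64]
print(horizontal_flip([[1, 2, 3], [4, 5, 6]]))                  # [[3, 2, 1], [6, 5, 4]]
```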

Next, we defined `CustomDataset` as follows.

```python
import cv2
from torch.utils.data import Dataset


class CustomDataset(Dataset):
    def __init__(self, file_list, label_list, transform=None):
        self.file_list = file_list    # image file paths
        self.label_list = label_list  # one-hot label rows
        self.transform = transform    # albumentations pipeline

    def __len__(self):
        return len(self.file_list)

    def __getitem__(self, index):
        image = cv2.imread(self.file_list[index])
        if image is None:
            raise FileNotFoundError(self.file_list[index])
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)  # OpenCV loads BGR; convert to RGB
        if self.transform:
            image = self.transform(image=image)["image"]
        label = self.label_list[index]
        return image, label
```

We then built DataLoaders on top of `CustomDataset`.

from torch.utils.data import DataLoader

# 파일 이름 및 레이블 목록 추출

train_files = train["file_name"].tolist()

train_labels = train.drop(["file_name", "index"], axis=1).values

valid_files = valid["file_name"].tolist()

valid_labels = valid.drop(["file_name", "index"], axis=1).values

# CustomDataset 정의

train_dataset = CustomDataset(train_files, train_labels, transform=train_transform)

valid_dataset = CustomDataset(valid_files, valid_labels, transform=valid_transform)

# DataLoader 정의

batch_size = 16

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)

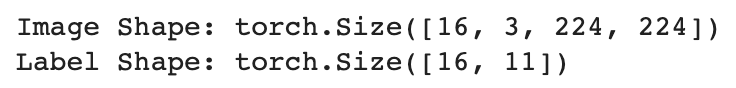

valid_loader = DataLoader(valid_dataset, batch_size=batch_size, shuffle=True)다음 결과를 확인해보자.

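```python
# Inspect one batch from the validation loader
for x, y in valid_loader:
    print(f'Image Shape: {x.shape}')
    print(f'Label Shape: {y.shape}')
    break
```

The image shape comes out as 224 × 224 as intended (the 3 is the RGB channel dimension), and each label has 11 entries, one per initial.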
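The leading dimension of `x` and `y` is the batch size. Conceptually, the DataLoader calls `CustomDataset.__getitem__` for consecutive indices and groups the results. Unless `drop_last=True` is passed, the final batch is smaller whenever the dataset size is not a multiple of 16. Here is a simplified sketch of that grouping. `iterate_batches` is a hypothetical helper: it yields plain lists and does not stack items into tensors the way the real DataLoader does.

```python
import math


def iterate_batches(dataset, batch_size):
    """Yield lists of dataset items in index order, like DataLoader(shuffle=False, drop_last=False).

    Simplified sketch: the real DataLoader also collates items into stacked tensors.
    """
    for start in range(0, len(dataset), batch_size):
        yield [dataset[i] for i in range(start, min(start + batch_size, len(dataset)))]


dataset = list(range(37))  # stand-in for a dataset with 37 items
batches = list(iterate_batches(dataset, batch_size=16))
print(len(batches), math.ceil(len(dataset) / 16))  # 3 3
print([len(b) for b in batches])                   # [16, 16, 5]
```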

5. Defining the model

The ArcFace model needs a longer explanation, so I'll cover it in detail in a separate post and only attach the code I wrote here.
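As a compact reference for the code below, here is what `ArcMarginProduct` computes with its default `easy_margin=False`. In these formulas, $x$ is the feature vector, $W_j$ is the $j$-th row of `self.weight`, $y$ is the true class, $s$ is `scale` (30 by default), $m$ is `margin` (0.5 by default), and $C$ is the number of classes. The loss line corresponds to the `nn.CrossEntropyLoss` applied during training.

$$
\cos\theta_j = \frac{W_j^{\top} x}{\lVert W_j \rVert \, \lVert x \rVert}
$$

$$
\phi = \begin{cases}
\cos\theta_y \cos m - \sin\theta_y \sin m = \cos(\theta_y + m), & \text{if } \cos\theta_y > \cos(\pi - m) \\
\cos\theta_y - m \sin m, & \text{otherwise}
\end{cases}
$$

$$
z_j = \begin{cases} s\,\phi, & j = y \\ s \cos\theta_j, & j \neq y \end{cases}
\qquad
\mathcal{L} = -\log \frac{e^{z_y}}{\sum_{k=1}^{C} e^{z_k}}
$$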

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision.models as models


class ArcMarginProduct(nn.Module):
    """ArcFace head: adds an angular margin to the ground-truth class logit."""

    def __init__(self, in_features, out_features, scale=30.0, margin=0.50, easy_margin=False, device='cuda'):
        super().__init__()
        self.in_features = in_features
        self.out_features = out_features
        self.scale = scale
        self.margin = margin
        self.device = device
        # One weight vector (class center) per class
        self.weight = nn.Parameter(torch.FloatTensor(out_features, in_features))
        nn.init.xavier_uniform_(self.weight)
        self.easy_margin = easy_margin
        self.cos_m = math.cos(margin)
        self.sin_m = math.sin(margin)
        self.th = math.cos(math.pi - margin)
        self.mm = math.sin(math.pi - margin) * margin

    def forward(self, input, label):
        # cos(theta) between normalized features and normalized class weights
        cosine = F.linear(F.normalize(input), F.normalize(self.weight))
        # clamp avoids sqrt of tiny negative values (NaN gradients) when |cos| is ~1
        sine = torch.sqrt((1.0 - torch.pow(cosine, 2)).clamp(min=1e-7))
        # cos(theta + m) = cos(theta)cos(m) - sin(theta)sin(m)
        phi = cosine * self.cos_m - sine * self.sin_m
        if self.easy_margin:
            phi = torch.where(cosine > 0, phi, cosine)
        else:
            phi = torch.where(cosine > self.th, phi, cosine - self.mm)
        # Apply the margin only to each sample's ground-truth class, then scale
        one_hot = torch.zeros(cosine.size(), device=input.device)
        one_hot.scatter_(1, label.view(-1, 1).long(), 1)
        output = (one_hot * phi) + ((1.0 - one_hot) * cosine)
        output *= self.scale
        return output


class CustomArcFaceModel(nn.Module):
    def __init__(self, num_classes, device='cuda'):
        super().__init__()
        self.device = device
        # ImageNet-pretrained ResNet-50 without its final fc layer -> (N, 2048, 1, 1).
        # weights=IMAGENET1K_V1 replaces the deprecated pretrained=True (same checkpoint).
        resnet = models.resnet50(weights=models.ResNet50_Weights.IMAGENET1K_V1)
        self.backbone = nn.Sequential(*list(resnet.children())[:-1])
        self.arc_margin_product = ArcMarginProduct(2048, num_classes, device=self.device)
        nn.init.kaiming_normal_(self.arc_margin_product.weight)

    def forward(self, x, labels=None):
        features = self.backbone(x)
        features = F.normalize(features)                # L2-normalize over the channel dim
        features = features.view(features.size(0), -1)  # flatten to (N, 2048)
        if labels is not None:
            return self.arc_margin_product(features, labels)  # training: margin logits
        return features                                 # inference: embedding

    def cosine_similarity(self, x1, x2):
        return torch.dot(x1, x2) / (torch.norm(x1) * torch.norm(x2))

    def find_most_similar_celebrity(self, user_face_embedding, celebrity_face_embeddings):
        max_similarity = -1
        most_similar_celebrity_index = -1
        for i, celebrity_embedding in enumerate(celebrity_face_embeddings):
            similarity = self.cosine_similarity(user_face_embedding, celebrity_embedding)
            if similarity > max_similarity:
                max_similarity = similarity
                most_similar_celebrity_index = i
        return most_similar_celebrity_index, max_similarity
```

When an image is fed in, the code above does the following:

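- The input image is passed to the `forward` method of `CustomArcFaceModel`.
- The image goes through the backbone stored in `self.backbone`, here ResNet-50 with its final fully connected layer removed.
- The backbone turns the image into a feature map of shape (N, 2048, 1, 1).
- The feature map is L2-normalized along the channel dimension.
- The normalized feature map is flattened into a 2048-dimensional vector.
- If labels are given, the feature vector passes through the ArcMarginProduct layer, `self.arc_margin_product`.
- ArcMarginProduct takes the vector and the labels, computes the cosine similarity to each class weight, adds the angular margin to the ground-truth class, and scales the result to produce the logits. The loss itself is computed afterwards by `nn.CrossEntropyLoss`.
- The logits are returned. If no labels are given, the normalized feature vector is returned instead.

In short, `CustomArcFaceModel` passes the input image through ResNet-50 to obtain a feature vector, and during training the ArcMarginProduct layer turns that vector into logits. At inference time, without labels, `forward` returns the normalized feature vector, which `find_most_similar_celebrity` compares against celebrity embeddings using cosine similarity.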
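The inference path of the model comes down to a nearest-neighbor search by cosine similarity. Here is a plain-Python sketch of the same logic as `cosine_similarity` and `find_most_similar_celebrity`, using toy 3-dimensional vectors in place of the real 2048-dimensional embeddings. The function names are illustrative only. Because `forward` already L2-normalizes the embeddings, the cosine similarity of two model outputs is simply their dot product.

```python
import math


def cosine_similarity(a, b):
    """cos(angle) between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def find_most_similar(query, gallery):
    """Return (index, similarity) of the gallery embedding closest to the query."""
    best_index, best_sim = -1, -1.0
    for i, emb in enumerate(gallery):
        sim = cosine_similarity(query, emb)
        if sim > best_sim:
            best_index, best_sim = i, sim
    return best_index, best_sim


# Toy 3-d "embeddings" (the real ones are 2048-d ResNet-50 features)
celebrities = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.6, 0.8, 0.0]]
user = [0.5, 0.9, 0.1]
idx, sim = find_most_similar(user, celebrities)
print(idx, round(sim, 3))  # 2 0.986
```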

6. Training the model

I wrote the training code so that it prints the loss and accuracy at the end of every epoch.

```python
import torch
import torch.nn as nn
from tqdm import tqdm


def train(model, optimizer, criterion, train_loader, valid_loader, device, epochs, scheduler=None):
    model.to(device)
    best_accuracy = 0.0

    for epoch in range(epochs):
        # ---- training ----
        model.train()
        train_loss, train_corrects = 0.0, 0
        for x, y in tqdm(train_loader, desc=f"Epoch {epoch + 1} - Training"):
            x = x.to(device)
            y = torch.argmax(y, dim=1).to(device)  # one-hot -> class index
            optimizer.zero_grad()
            output = model(x, y)                   # ArcFace margin logits
            loss = criterion(output, y)
            loss.backward()
            optimizer.step()
            preds = output.argmax(dim=1)
            train_loss += loss.item() * x.size(0)
            train_corrects += (preds == y).sum().item()
        train_loss /= len(train_loader.dataset)
        train_accuracy = train_corrects / len(train_loader.dataset)

        # ---- validation ----
        model.eval()
        valid_loss, valid_corrects = 0.0, 0
        with torch.no_grad():
            for x, y in tqdm(valid_loader, desc=f"Epoch {epoch + 1} - Validation"):
                x = x.to(device)
                y = torch.argmax(y, dim=1).to(device)
                output = model(x, y)
                loss = criterion(output, y)
                preds = output.argmax(dim=1)
                valid_loss += loss.item() * x.size(0)
                valid_corrects += (preds == y).sum().item()
        valid_loss /= len(valid_loader.dataset)
        valid_accuracy = valid_corrects / len(valid_loader.dataset)

        print(f"Epoch {epoch + 1}/{epochs} - Train Loss: {train_loss:.4f}, Train Acc: {train_accuracy:.4f}, "
              f"Valid Loss: {valid_loss:.4f}, Valid Acc: {valid_accuracy:.4f}")

        # ReduceLROnPlateau(mode='max') monitors validation accuracy
        if scheduler is not None:
            scheduler.step(valid_accuracy)

        # Keep the checkpoint with the best validation accuracy
        if valid_accuracy > best_accuracy:
            best_accuracy = valid_accuracy
            torch.save(model.state_dict(), "arcface.pth")

    return model
```

```python
# Hyperparameter settings
num_classes = 11  # number of classes (CDE, CHJ, HAR, IDH, IJH, JJJ, JSI, OJY, SHE, SHG, SMO)
embedding_size = 2048
learning_rate = CFG['LEARNING_RATE']
epochs = 150
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Build the model
model = CustomArcFaceModel(num_classes)

# Optimizer, loss function, and learning-rate scheduler
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)
criterion = nn.CrossEntropyLoss()
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
    optimizer, mode='max', factor=0.5, patience=2, threshold_mode='abs', min_lr=1e-8)

# Train the model
trained_model = train(model, optimizer, criterion, train_loader, valid_loader, device, epochs, scheduler)
```

After setting the hyperparameters and training the model, we obtained the final training results.

The results show high train accuracy but much lower validation accuracy, which points to overfitting.
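One detail is worth keeping in mind when reading these numbers. The training loop also calls `model(x, y)` during validation, so ArcMarginProduct applies the margin $m$ to the true class before accuracy is computed. The true-class logit is lowered from $s\cos\theta_y$ to $s\cos(\theta_y + m)$. As a result, a sample whose true class is already the nearest class center can still be counted as wrong. The plain-Python sketch below shows one such case. Its helpers `arcface_logits` and `predict` are hypothetical and mirror ArcMarginProduct's formulas with $s = 30$ and $m = 0.5$. This does not rule out overfitting, because train accuracy is measured the same way. Evaluating validation on margin-free cosine logits would, however, give a cleaner view of the gap.

```python
import math


def arcface_logits(cosines, target, s=30.0, m=0.5):
    """Scaled logits as in ArcMarginProduct (easy_margin=False): margin only on the target class."""
    cos_t = cosines[target]
    if cos_t > math.cos(math.pi - m):
        sin_t = math.sqrt(max(0.0, 1.0 - cos_t ** 2))
        phi = cos_t * math.cos(m) - sin_t * math.sin(m)  # cos(theta + m)
    else:
        phi = cos_t - math.sin(math.pi - m) * m          # fallback used by the original code
    return [s * (phi if j == target else c) for j, c in enumerate(cosines)]


def predict(logits):
    """Index of the largest logit."""
    return max(range(len(logits)), key=logits.__getitem__)


cosines = [0.7, 0.6]  # true class 0 is already the closest class center
print(predict([30.0 * c for c in cosines]))        # 0 (plain scaled cosine: correct)
print(predict(arcface_logits(cosines, target=0)))  # 1 (margin logits: counted as wrong)
```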

Next, I plan to tune the hyperparameters further and train the model again.
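One of the knobs involved in that tuning is the `ReduceLROnPlateau` scheduler configured above. It only acts when `scheduler.step(metric)` is called once per epoch with the monitored value, which here is validation accuracy because `mode='max'`. The sketch below re-implements its decision rule in plain Python for these settings: `factor=0.5`, `patience=2`, `threshold_mode='abs'`, `min_lr=1e-8`, and the default `threshold=1e-4`. The function `plateau_schedule` is hypothetical and is a simplification of PyTorch's class. For example, it ignores `cooldown` and `eps`.

```python
def plateau_schedule(metrics, lr, factor=0.5, patience=2, threshold=1e-4, min_lr=1e-8):
    """Simplified ReduceLROnPlateau(mode='max', threshold_mode='abs', cooldown=0).

    Returns the learning rate in effect after each epoch's metric is reported.
    """
    best = float("-inf")
    bad_epochs = 0
    history = []
    for metric in metrics:
        if metric > best + threshold:  # 'abs' mode: must beat the best by more than threshold
            best = metric
            bad_epochs = 0
        else:
            bad_epochs += 1
        if bad_epochs > patience:      # patience exhausted -> shrink the learning rate
            lr = max(lr * factor, min_lr)
            bad_epochs = 0
        history.append(lr)
    return history


print(plateau_schedule([0.50, 0.60, 0.60, 0.60, 0.60, 0.60], lr=1e-3))
# [0.001, 0.001, 0.001, 0.001, 0.0005, 0.0005]
```

With `patience=2`, the learning rate is halved on the third consecutive epoch without improvement, not the second.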