[Toy Project: Empathy Chatbot] Project Overview

For a small NLP toy project, I built a chatbot that responds with empathy or gives counseling-style replies when you write about how you are feeling.

References:

This project fine-tunes SKT's generative model KoGPT2 and uses the well-known chatbot dataset by 송영숙 (songys).

The full code is on GitHub.

1. Data preprocessing

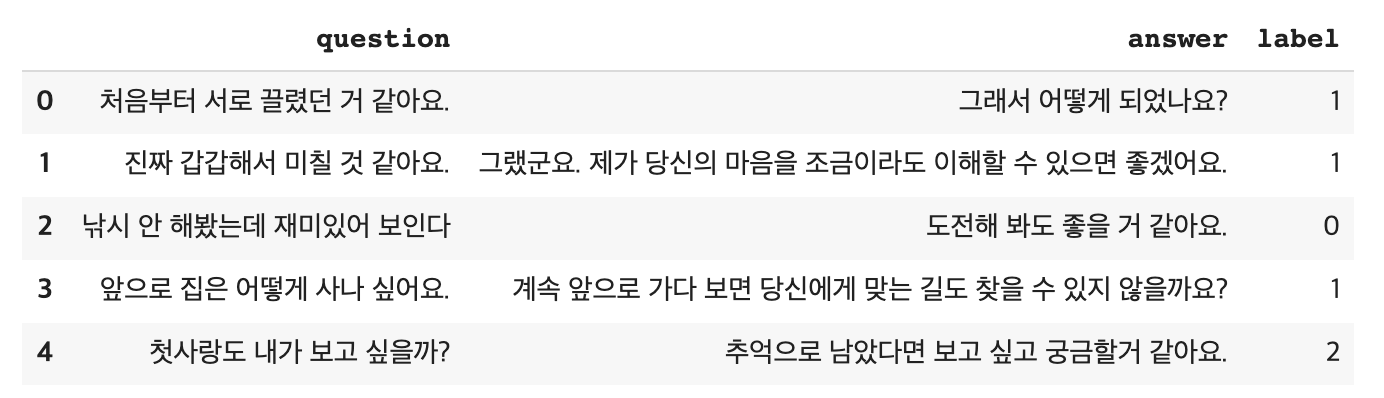

우선 사용한 데이터를 df에 저장하고 df.head()를 실행해 보면 다음과 같다:

There is a `label` column: 0 is everyday small talk, 1 is breakup (negative emotion), and 2 is love (positive emotion).

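Looking back after training, it would have been much better to include this label in training (less for the answers themselves than for having a metric to evaluate the answers against).

At first, though, my only goal was to get an answer back when I fed text to the chatbot, so I dropped the label and trained without it.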

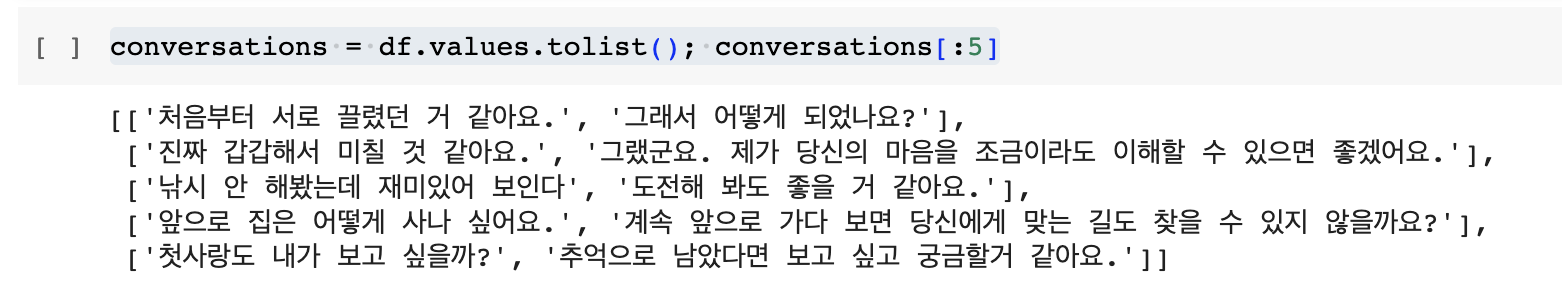

Then I stored the question-answer pairs as a list in a variable called `conversations`.
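A minimal sketch of this step, assuming the CSV uses the `Q`, `A`, `label` column layout of the songys dataset. It uses the standard `csv` module on a made-up two-row sample so it runs on its own; the sample rows and `load_conversations` are hypothetical, and the original worked on a pandas DataFrame instead.

```python
import csv
import io

# Hypothetical two-row sample in the assumed Q,A,label layout (rows invented for illustration)
SAMPLE_CSV = """Q,A,label
오늘 우울해서 파마했어,기분 전환이 됐으면 좋겠네요.,1
주말에 뭐 하지,가까운 곳으로 산책 어때요?,0
"""


def load_conversations(csv_text):
    """Drop the label column and keep (question, answer) pairs."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [(row["Q"], row["A"]) for row in reader]


conversations = load_conversations(SAMPLE_CSV)
print(conversations[0])  # ('오늘 우울해서 파마했어', '기분 전환이 됐으면 좋겠네요.')
```

With a DataFrame that has those (assumed) column names, `df[["Q", "A"]].values.tolist()` gives the same pairs as lists, which `question, answer = conversation` unpacks just as well.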

2. Tokenizing

How tokenizing works

A computer cannot work with the raw text in this dataset directly.

So the text has to be split into pieces, each piece mapped to a specific number (an id), and those ids fed to the model as input.

There are three main ways to split input text into smaller units:

- word-based

- character-based

- subword-based

In brief: word-based splits text into words and gives each word its own id;

character-based splits all text into characters and gives each character its own id;

subword-based keeps frequently used words whole and splits rare words into meaningful subwords.

The tokenizer I used, `PreTrainedTokenizerFast` loaded with KoGPT2's pretrained tokenizer, is subword-based.
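To make the three strategies concrete, here is a toy comparison on the example sentence used below. The subword splitter is a greedy longest-match (WordPiece-style) stand-in over a hypothetical five-entry vocabulary; KoGPT2's actual tokenizer uses a vocabulary learned with BPE, so its real splits will differ.

```python
def word_tokenize(text):
    # word-based: split on whitespace
    return text.split()


def char_tokenize(text):
    # character-based: every non-space character becomes a token
    return [ch for ch in text if not ch.isspace()]


def subword_tokenize(word, vocab, unk="<unk>"):
    """Toy greedy longest-match split of one word into known subwords (WordPiece-style)."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # shrink the candidate until it is a known piece
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:  # no known piece at this position: the whole word becomes unk
            return [unk]
        pieces.append(word[start:end])
        start = end
    return pieces


# Hypothetical tiny vocabulary
VOCAB = {"오늘", "우울", "해서", "파마", "했어"}
sentence = "오늘 우울해서 파마했어"

print(word_tokenize(sentence))       # ['오늘', '우울해서', '파마했어']
print(len(char_tokenize(sentence)))  # 10
print([p for w in sentence.split() for p in subword_tokenize(w, VOCAB)])
# ['오늘', '우울', '해서', '파마', '했어']
```

The subword split keeps the vocabulary small while still covering inflected forms like 우울해서 that a word-based vocabulary would have to store whole.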

Tokenizer setup

For the tokenizer, I specified special tokens that mark the start and end of text, unknown tokens, padding, masking, and separation: `bos_token`, `eos_token`, `unk_token`, `pad_token`, `mask_token`, and `sep_token`.

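```python
from transformers import PreTrainedTokenizerFast

# Special tokens used to frame, separate, and pad the training sequences
BOS = '<bos>'
EOS = '<eos>'
MASK = '<unused0>'
PAD = '<pad>'
SEP = '<sep>'

tokenizer = PreTrainedTokenizerFast.from_pretrained(
    "skt/kogpt2-base-v2",
    bos_token=BOS,
    eos_token=EOS,
    unk_token='<unk>',
    pad_token=PAD,
    mask_token=MASK,
    sep_token=SEP,
)
```

I only thought of the unk token after writing the rest, so it is not pretty, but I just passed it in as a string literal.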

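A brief description of each token:

- bos_token: marks the beginning of a sequence

- eos_token: marks the end of a sequence

- unk_token: stands in for a token that is not in the vocabulary

- pad_token: fills sequences up to a common length

- mask_token: marks a masked position

- sep_token: separates segments (here, the question from the answer)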

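Let's look at an example.

Tokenizing '오늘 우울해서 파마했어' ("I got a perm today because I was feeling down") and adding the start and end tokens around it gives something like:

['<bos>', '오늘', '우울_', '_해서', '파마_', '_했어', '<eos>']

If the word '파마' (perm) were not in the tokenizer's vocabulary, it would be replaced by the unknown token:

['<bos>', '오늘', '우울_', '_해서', '<unk>', '_했어', '<eos>']

(In practice a subword tokenizer can often fall back to smaller known pieces, such as single characters, so a whole word rarely becomes `<unk>`.)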

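Now let's encode these tokens as numbers the computer can work with.

The call is simple: `tokenizer.encode(sentence)`.

The tokens above then encode to something like this (the numbers are arbitrary):

[1, 8723, 1289, 7893, 4536, 1632, 0]

Tokens registered in the tokenizer's vocabulary are encoded to their existing index,

while tokens the user added, which had no index, are encoded to newly assigned indices. For the model to use those new ids, its embedding matrix has to grow to match, e.g. with `model.resize_token_embeddings(len(tokenizer))`.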
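A toy sketch of the lookup described above: known tokens map to their existing ids, unknown ones fall back to `<unk>`, and user-added tokens get the next free id. `ToyVocab` and its ids are hypothetical; the real tokenizer's ids come from its trained vocabulary.

```python
class ToyVocab:
    """Hypothetical token-to-id table: lookup, <unk> fallback, and user-added tokens."""

    def __init__(self, tokens, unk_token="<unk>"):
        self.unk_token = unk_token
        self.token_to_id = {}
        for tok in [unk_token, *tokens]:
            self.add_token(tok)

    def add_token(self, token):
        # A token already in the table keeps its id; a new one gets the next free id
        if token not in self.token_to_id:
            self.token_to_id[token] = len(self.token_to_id)
        return self.token_to_id[token]

    def encode(self, tokens):
        unk_id = self.token_to_id[self.unk_token]
        return [self.token_to_id.get(tok, unk_id) for tok in tokens]


vocab = ToyVocab(["오늘", "우울_", "_해서", "파마_", "_했어"])  # ids 1..5, <unk> is 0
vocab.add_token("<bos>")  # user-defined special tokens are appended: id 6
vocab.add_token("<eos>")  # id 7

print(vocab.encode(["<bos>", "오늘", "우울_", "_해서", "파마_", "_했어", "<eos>"]))
# [6, 1, 2, 3, 4, 5, 7]
print(vocab.encode(["<bos>", "오늘", "염색_", "<eos>"]))  # unseen token -> <unk>
# [6, 1, 0, 7]
```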

Applying the tokenizer

Now let's apply this to the processed dataset. The code is:

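```python
import torch
from torch.utils.data import Dataset


class ChatDataset(Dataset):
    def __init__(self, conversations, tokenizer, max_length=150):
        self.tokenizer = tokenizer
        self.inputs = []
        self.labels = []

        BOS_ID = tokenizer.bos_token_id
        EOS_ID = tokenizer.eos_token_id
        SEP_ID = tokenizer.sep_token_id
        PAD_ID = tokenizer.pad_token_id

        for conversation in conversations:
            question, answer = conversation

            # Tokenize
            question_ids = tokenizer.encode(question)
            answer_ids = tokenizer.encode(answer)

            # Build [BOS] question [SEP] answer [EOS]
            input_ids = [BOS_ID] + question_ids + [SEP_ID] + answer_ids + [EOS_ID]

            # Labels: -100 for [BOS] question [SEP] and for [EOS], answer ids elsewhere.
            # Note: masking EOS means the model is never trained to emit it;
            # using [EOS_ID] there instead would teach it where an answer ends.
            label_ids = [-100] * (len(question_ids) + 2) + answer_ids + [-100]

            # Truncate: longer pairs would otherwise give tensors of unequal length and break batching
            input_ids = input_ids[:max_length]
            label_ids = label_ids[:max_length]

            # Pad up to max_length (padding is ignored in the loss via -100)
            padding = max_length - len(input_ids)
            input_ids += [PAD_ID] * padding
            label_ids += [-100] * padding

            self.inputs.append(input_ids)
            self.labels.append(label_ids)

    def __len__(self):
        return len(self.inputs)

    def __getitem__(self, idx):
        return torch.tensor(self.inputs[idx]), torch.tensor(self.labels[idx])
```

To represent the context well, the question and its answer are tokenized and combined into the form [BOS] question [SEP] answer [EOS]. Encoding them with these separators lets the model recognize the boundary between question and answer, so it can learn to generate an answer to the question.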

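I also had to build labels for training. Since the goal is to generate an answer that fits the question, only the answer part is used as the label.

The labels are encoded as [-100]*(len(question_ids)+2) + answer_ids + [-100]*1. The [BOS] question [SEP] part and the [EOS] position are set to -100 so that only the answer tokens (answer_ids) serve as labels. The label list lines up position by position with input_ids; the GPT-2 model shifts the labels by one internally when computing the loss, so each position is scored on predicting the next token.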

So why -100?

Because some PyTorch loss functions, such as CrossEntropyLoss, treat -100 as a special value (it is the default `ignore_index`).

Positions labeled -100 are left out of the loss: whatever the model predicts there has no effect on it.

In the code above, this keeps the question positions and the padding out of the loss when training GPT-2, so the model learns to minimize the loss on the answer tokens only.
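To see the masking, and the one-position shift a causal LM applies, in actual numbers, here is a pure-Python sketch of a mean cross-entropy that skips -100 targets, mirroring PyTorch's default `ignore_index=-100`. `causal_lm_loss` pairs each position's logits with the next position's label, which is what Hugging Face's GPT-2 LM head does internally when given `labels`; the helper names, logits, and ids are made up for illustration.

```python
import math

IGNORE_INDEX = -100  # same value as PyTorch's default CrossEntropyLoss ignore_index


def masked_cross_entropy(logits, labels, ignore_index=IGNORE_INDEX):
    """Mean cross-entropy over positions whose label is not ignore_index."""
    total, count = 0.0, 0
    for row, label in zip(logits, labels):
        if label == ignore_index:
            continue  # this position contributes nothing to the loss
        log_sum_exp = math.log(sum(math.exp(x) for x in row))
        total += log_sum_exp - row[label]  # -log softmax(row)[label]
        count += 1
    return total / count if count else float("nan")  # nan when every target is ignored


def causal_lm_loss(logits, labels):
    """Position t predicts token t+1: drop the last logits row and the first label."""
    return masked_cross_entropy(logits[:-1], labels[1:])


# Hypothetical sequence [BOS] q [SEP] a [EOS] over a 3-token vocabulary
labels = [-100, -100, -100, 2, -100]  # only the answer token (id 2) is a target
logits = [
    [0.1, 0.2, 0.3],  # at [BOS]
    [1.0, 0.0, 0.0],  # at q
    [0.0, 0.0, 2.0],  # at [SEP]: the only row scored, against the answer token
    [0.5, 0.5, 0.5],  # at a: its target would be [EOS], which is masked
    [0.0, 0.0, 0.0],  # at [EOS]: nothing follows, so it is dropped
]
loss = causal_lm_loss(logits, labels)

# Changing the logits at masked positions leaves the loss unchanged
other = [[5.0, -5.0, 0.0], [3.0, 3.0, 3.0]] + logits[2:]
print(loss == causal_lm_loss(other, labels))  # True
```

The row at `a` shows the effect of masking EOS: the prediction of the end token never reaches the loss.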

3. Model training

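For the optimizer I used AdamW, one of the optimizers commonly and effectively used in NLP and deep learning.

AdamW applies weight decay directly to the weights, decoupled from the gradient-based Adam update, so it keeps the weights in check (regularizes them) while it optimizes.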
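A scalar sketch of one AdamW step, to show what "decoupled" means: the decay term `lr * weight_decay * theta` is subtracted from the weight directly instead of being folded into the gradient as in L2-regularized Adam. The default hyperparameters mirror `torch.optim.AdamW`'s defaults, but `adamw_step` itself is a hypothetical helper, not PyTorch code.

```python
import math


def adamw_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One AdamW update of a single scalar parameter (hypothetical helper)."""
    m = beta1 * m + (1 - beta1) * grad         # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction for step t
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * weight_decay * theta  # decoupled decay: applied to the weight directly
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)  # the usual Adam step
    return theta, m, v


# With a zero gradient the Adam step is 0, yet the weight still shrinks by lr * weight_decay * theta
theta, m, v = adamw_step(theta=1.0, grad=0.0, m=0.0, v=0.0, t=1)
print(theta < 1.0)  # True
```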

The training code is as follows:

from tqdm import tqdm

# 훈련 루프

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model.to(device)

for epoch in range(10): # 에폭 수를 적절하게 조정해주세요.

total_loss = 0

for i, (inputs, labels) in enumerate(tqdm(dataloader)):

inputs = inputs.to(device)

labels = labels.to(device)

outputs = model(input_ids=inputs, labels=labels)

loss = outputs.loss

total_loss += loss.item()

optimizer.zero_grad()

loss.backward()

optimizer.step()

print(f"Epoch {epoch+1}, Loss: {total_loss/(i+1)}")

# 모델 저장

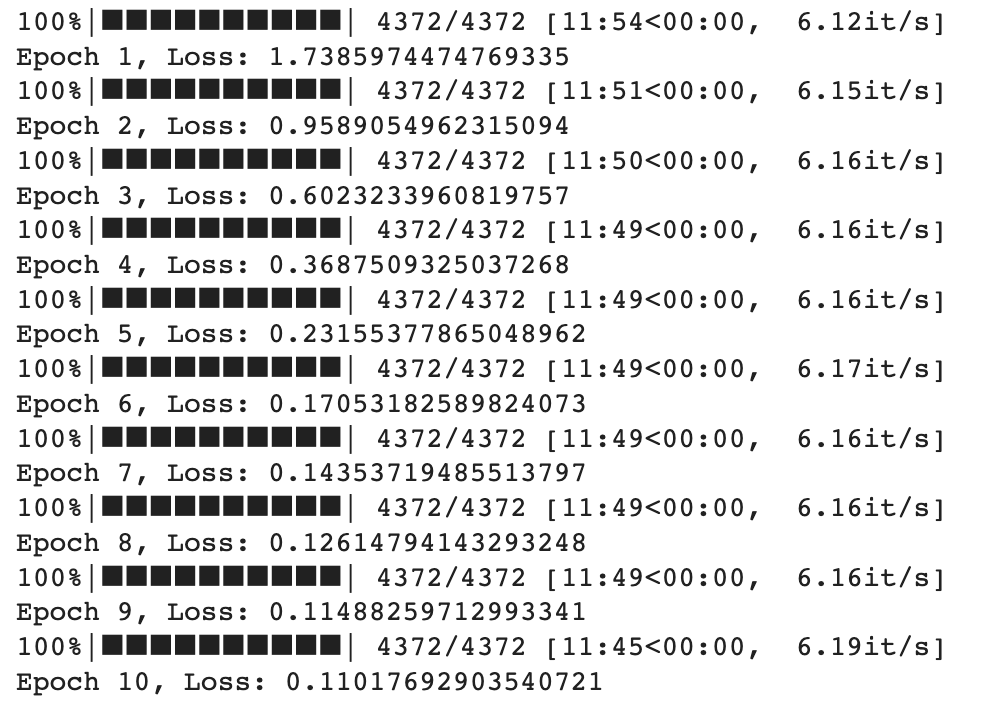

torch.save(model.state_dict(), "koGpt2_chatbot.pt")이렇게 실행하여 추출된 각 epoch 당 loss는 다음과 같다:

4. Generating answers

Finally, I wrote the answer-generation code as follows:

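```python
# Generate an answer
# `inputs`: a (1, seq_len) tensor of prompt token ids on the model's device (built earlier, not shown)
generated = model.generate(
    inputs,
    max_length=60,           # counts the prompt tokens as well as the new ones
    do_sample=True,          # temperature only takes effect when sampling
    temperature=0.2,
    no_repeat_ngram_size=3,
    pad_token_id=tokenizer.pad_token_id,
    eos_token_id=tokenizer.eos_token_id,
    bos_token_id=tokenizer.bos_token_id,
)

# Keep only the newly generated tokens of the first sequence
generated_text = tokenizer.decode(generated[:, inputs.shape[-1]:][0], skip_special_tokens=True)
```

- `temperature`, which controls how diverse the sampling is during generation, is set to 0.2.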

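- The lower the temperature, the more the model favors the most likely token, making the text more consistent and deterministic; the higher it is, the more randomness it introduces, allowing more varied and creative answers. (In Hugging Face's `generate`, this applies only with `do_sample=True`; otherwise decoding is greedy and `temperature` is ignored.)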

- `no_repeat_ngram_size`, which prevents repeated n-grams during generation, is set to 3, so no sequence of 3 tokens can appear twice in the output.
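Both settings can be sketched in a few lines of plain Python. These are simplified re-implementations for illustration, not Hugging Face's code: temperature divides the logits before the softmax, and the no-repeat rule bans any token that would complete an n-gram already present in the output. The function names and example values are hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax of logits / temperature; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def banned_next_tokens(generated, n):
    """Tokens that would complete an n-gram already present in `generated`."""
    if len(generated) < n:
        return set()  # no complete n-gram exists yet
    prefix = tuple(generated[len(generated) - n + 1:])  # last n-1 tokens
    return {
        generated[i + n - 1]
        for i in range(len(generated) - n + 1)
        if tuple(generated[i:i + n - 1]) == prefix
    }


logits = [2.0, 1.0, 0.0]
print([round(p, 3) for p in softmax_with_temperature(logits, 1.0)])  # [0.665, 0.245, 0.09]
print([round(p, 3) for p in softmax_with_temperature(logits, 0.2)])  # [0.993, 0.007, 0.0]

# "5 7 9" already occurred, so after "... 5 7" the token 9 is banned when n = 3
print(banned_next_tokens([5, 7, 9, 5, 7], 3))  # {9}
```

At temperature 0.2 almost all probability mass lands on the top token, which is why a low temperature behaves close to greedy decoding.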

5. Results

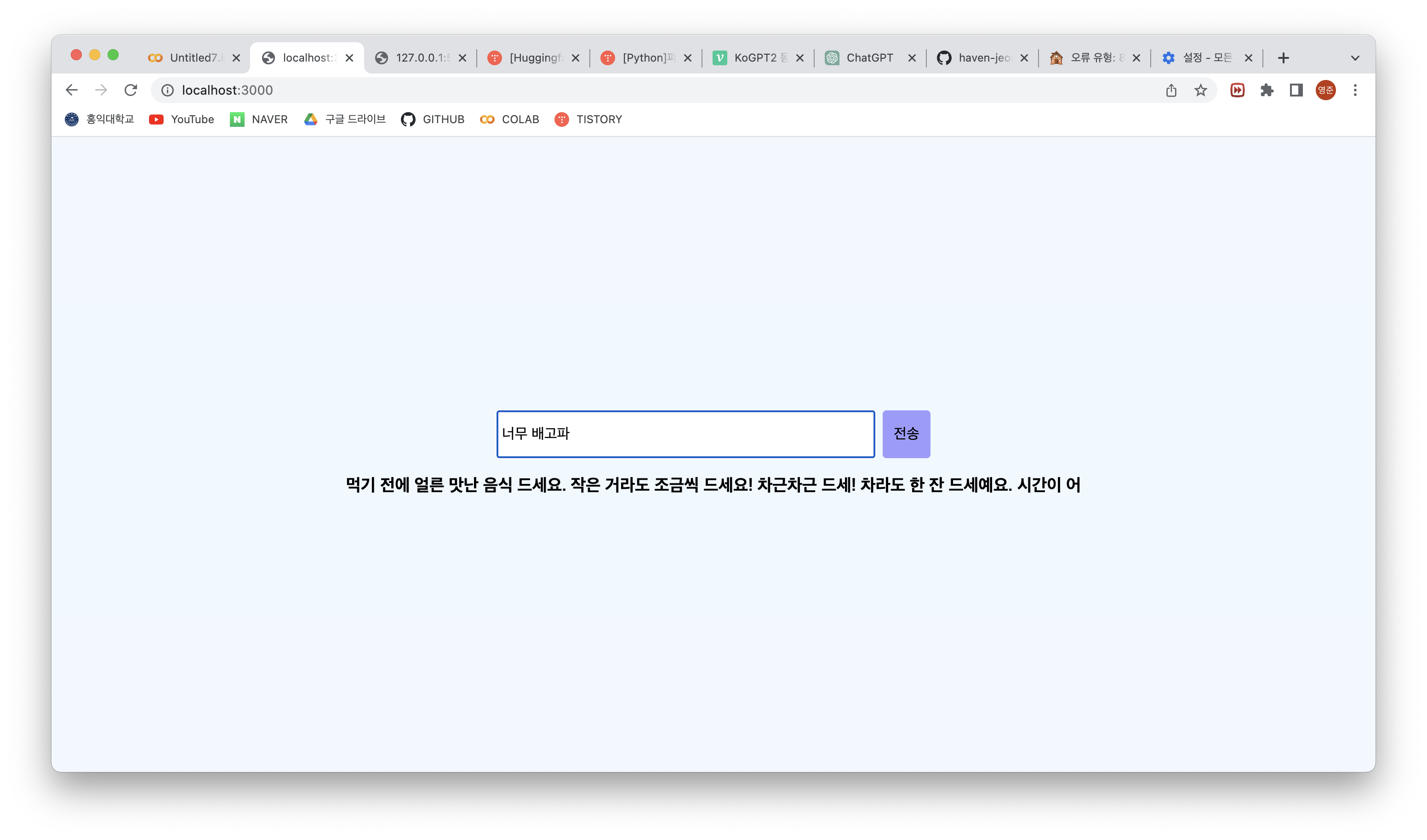

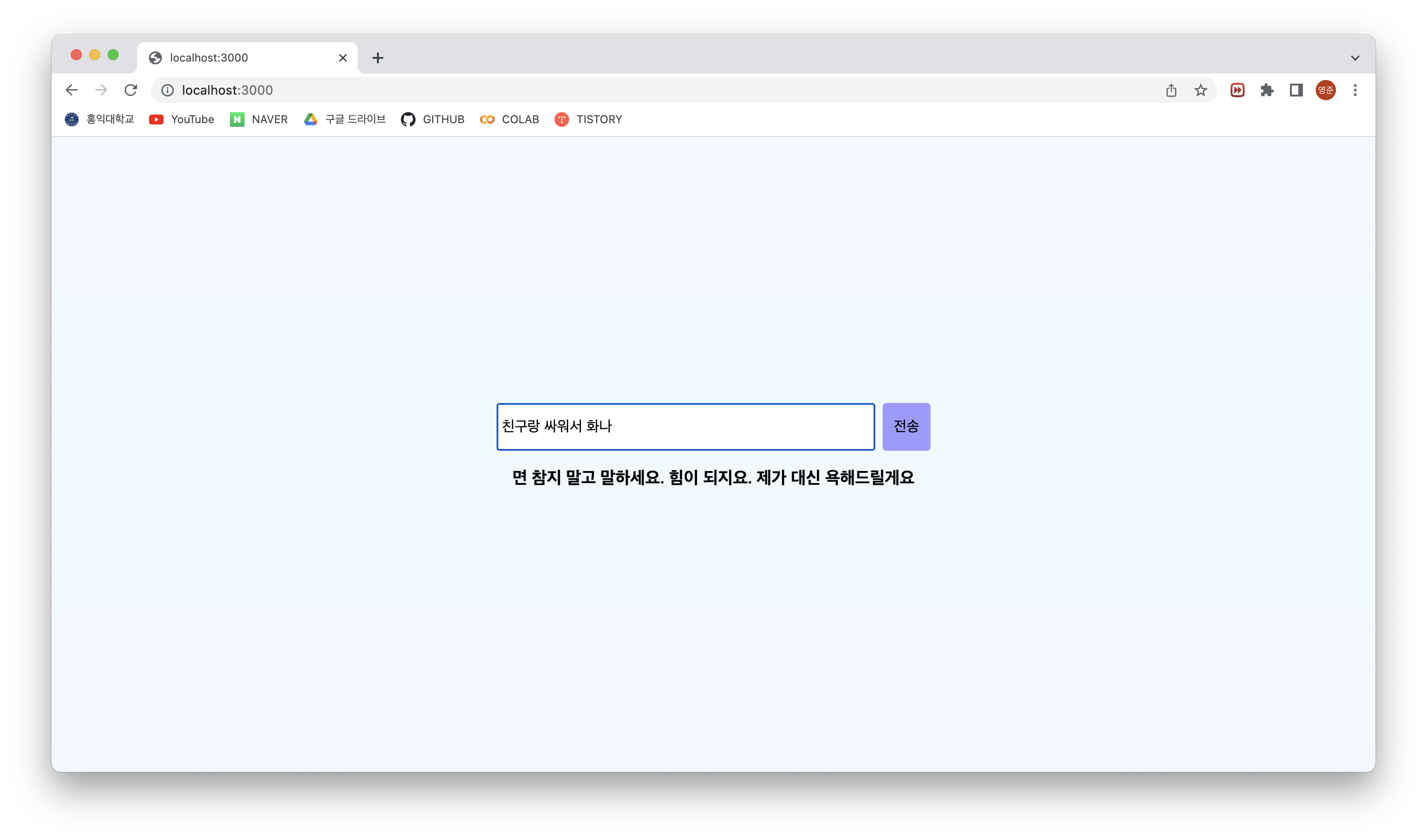

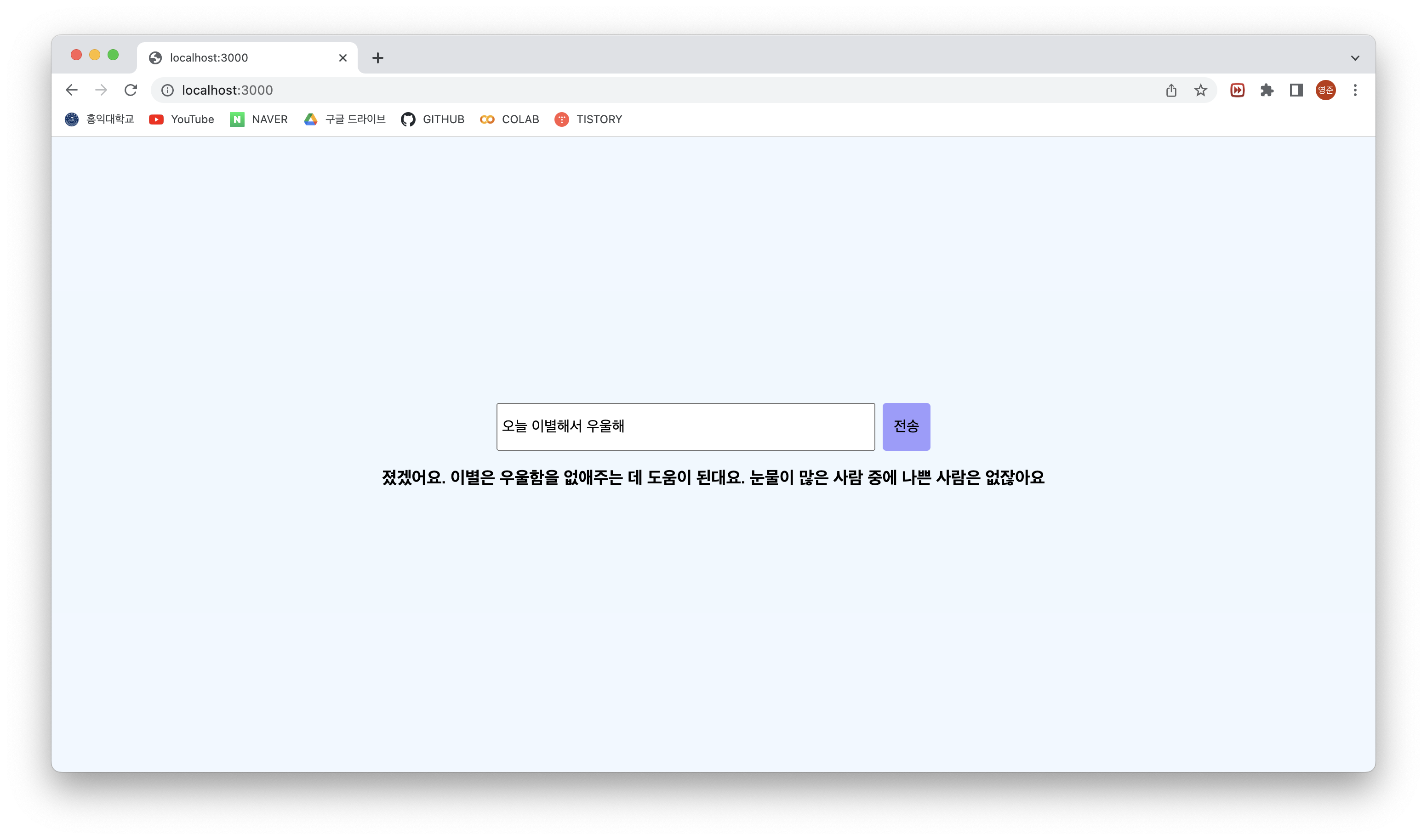

이후, 간단하게 프론트와 백을 구성하고 모델을 연결시켜 봤다. 확실히 한번 해본 지라, 30분 만에 프론트, 백을 구성했다.

결과는 다음과 같다 (사진 첨부):

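It was fine at first, but the answers got strange once I asked a wider variety of questions. I should try training for more epochs.

Also, the beginning of each answer always comes out oddly. Maybe the model is trying to continue the text I wrote..??

I'm not sure why, but I'll try to fix it...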

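In a single day, I did some light data processing, wrote the training code, trained the model, and got a working result.

I had to move fast, so the work isn't polished, but next time I'd like to try a larger dataset and include the emotion labels!!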