[CS224N] 2. Neural Classifiers

I'm writing this post to organize and share what I learned from the second CS224N lecture. For reference, I took the 2021 Winter version.

Review: Main idea of Word2Vec & Negative Sampling

In my previous post I summarized Word2Vec, so let's briefly review it.

Word2Vec comes with two algorithms: CBOW and Skip-gram.

1. CBOW

The algorithm that takes the context vectors as input and outputs the center vector is called CBOW. The overall structure of CBOW is as follows:

To summarize the process:

1. The 2 × (window size) context words, each represented as a one-hot vector, are fed in as input.

2. Each input is multiplied by the first weight matrix W, and the results are averaged into the vector M.

3. This vector is multiplied by the second weight matrix W', and a softmax turns the result into the vector ŷ.

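4. Finally, the cross-entropy between ŷ and the one-hot vector of the true center word is computed, and the word with the highest probability in ŷ is the predicted center word.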

The cross-entropy loss computed in this last step is minimized with gradient descent, which repeatedly updates W and W'.
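The four steps above can be sketched as a single NumPy forward pass. This is a minimal sketch under assumptions: a toy vocabulary size `V`, embedding size `N`, random weights, and the helper names `softmax` and `cbow_forward` are all chosen for illustration. It computes ŷ and the cross-entropy loss but leaves out the gradient-descent update.

```python
import numpy as np

def softmax(z):
    # Subtract the max before exponentiating for numerical stability
    e = np.exp(z - z.max())
    return e / e.sum()

def cbow_forward(context_ids, center_id, W, W_prime):
    """One CBOW forward pass (illustrative sketch).

    context_ids: indices of the 2m context words
    W:       (V, N) first weight matrix
    W_prime: (N, V) second weight matrix
    """
    V = W.shape[0]
    # Step 1: one-hot encode each context word
    one_hots = np.eye(V)[context_ids]            # (2m, V)
    # Step 2: multiply by W and average -> hidden vector M
    M = (one_hots @ W).mean(axis=0)              # (N,)
    # Step 3: multiply by W' and apply softmax -> y_hat
    y_hat = softmax(M @ W_prime)                 # (V,)
    # Step 4: cross-entropy against the one-hot true center word
    loss = -np.log(y_hat[center_id])
    return y_hat, loss

rng = np.random.default_rng(0)
V, N = 7, 4                                      # toy sizes (assumed)
W = rng.normal(scale=0.1, size=(V, N))
W_prime = rng.normal(scale=0.1, size=(N, V))
y_hat, loss = cbow_forward([0, 1, 3, 4], center_id=2, W=W, W_prime=W_prime)
print(y_hat.argmax(), round(float(loss), 3))
```

Multiplying a one-hot vector by W simply selects one row of W, so practical implementations index `W[context_ids]` directly instead of building the one-hot matrix.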

2. Skip-gram

Skip-gram is the algorithm that predicts the context vectors from the center vector. The overall structure of Skip-gram is as follows:

Here, just like CBOW's input, the output consists of 2 × (window size) vectors, one for each context position.

Apart from that, the computation is the same as in CBOW; only the order is reversed.
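To make the reversed direction concrete, here is a small sketch of how Skip-gram turns a sentence into (center, context) training pairs. The helper name `skipgram_pairs` and the window size of 2 are assumptions for illustration. A center word with a full window on both sides produces 2 × (window size) pairs, one per prediction target.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every position in `tokens`."""
    pairs = []
    for i, center in enumerate(tokens):
        # Context positions: up to `window` words on each side, excluding the center
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

tokens = "the fat cat sat on the mat".split()
pairs = skipgram_pairs(tokens, window=2)
print([p for p in pairs if p[0] == "cat"])
# [('cat', 'the'), ('cat', 'fat'), ('cat', 'sat'), ('cat', 'on')]
```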

This lecture uses Word2Vec based on the Skip-gram algorithm.

3. Optimization of Word2Vec

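Plain gradient descent is too expensive, because every update needs the gradient over all windows in the corpus. To address this, we apply Stochastic Gradient Descent (SGD), which updates the parameters after each sampled window (or small batch of windows).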

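However, as the figure above shows, each SGD step only involves the few words in one window, so with the very sparse one-hot vectors used as Word2Vec's inputs and outputs, the gradient is extremely sparse. The gradient for every word that does not appear in the window is 0, and computing and applying all of those zeros is wasted work, so in practice only the embedding rows of the words that actually appear are updated. A related cost is the softmax, whose normalization sums over the entire vocabulary; negative sampling is used to avoid it.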
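The sparse-update idea can be illustrated with an SGD step that touches only the embedding rows of words in the current window instead of the whole matrix. This is a toy sketch under assumptions: `sparse_sgd_update` is a hypothetical helper, and the gradient values are made up.

```python
import numpy as np

def sparse_sgd_update(E, row_ids, row_grads, lr=0.1):
    """Update only the given rows of embedding matrix E, in place.

    E:         (V, N) embedding matrix
    row_ids:   indices of the words that appeared in the window
    row_grads: (len(row_ids), N) gradients for those rows
    """
    # np.subtract.at accumulates correctly when an index repeats
    np.subtract.at(E, row_ids, lr * row_grads)
    return E

V, N = 10_000, 4
E = np.zeros((V, N))
window_ids = np.array([12, 7, 12])       # word 12 appears twice in this window
grads = np.ones((3, N))                  # made-up gradients for illustration
sparse_sgd_update(E, window_ids, grads)
print(E[12], E[7], np.count_nonzero(E.any(axis=1)))
```

Using `np.subtract.at` rather than `E[row_ids] -= ...` matters when a word repeats in the window: plain fancy-index assignment applies only one of the duplicate updates.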

4. Negative Sampling

The central idea of negative sampling is as follows:

Label the surrounding words as positive and randomly sampled words as negative, build a dataset for a binary classification problem from them, and train a binary logistic regression on it.

Example

For example, take the sentence 'The fat cat sat on the mat' from my previous post.

Plain Skip-gram predicts the context words from a single center word, but with negative sampling we have to sample both positive and negative words.

As in the figure above, sampling the words inside the given window is called positive sampling, and sampling words at random from the vocabulary is called negative sampling. Positive samples get the label 1 and negative samples the label 0.
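The labeling step above can be sketched for the example sentence as follows. Assumptions: negatives are drawn uniformly from the vocabulary for simplicity (the original Word2Vec paper instead samples from the unigram distribution raised to the 3/4 power), there are `k=2` negatives per positive pair, and the function name `make_ns_dataset` is hypothetical.

```python
import random

def make_ns_dataset(tokens, window=2, k=2, seed=0):
    """Build (center, word, label) triples: label 1 = positive, 0 = negative."""
    rng = random.Random(seed)
    vocab = sorted(set(tokens))
    data = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j == i:
                continue
            # Positive sample: a real (center, context) pair from the window
            data.append((center, tokens[j], 1))
            # Negative samples: k words drawn uniformly from the vocabulary
            for _ in range(k):
                data.append((center, rng.choice(vocab), 0))
    return data

tokens = "the fat cat sat on the mat".split()
data = make_ns_dataset(tokens, window=2, k=2)
for row in data[:6]:
    print(row)
```

With uniform sampling a "negative" can occasionally coincide with a real context word; the sketch does not filter those out.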

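Next, each pair is split into two inputs: input 1, the center word (fixed for all of its pairs), goes through the center-word embedding layer, and input 2, the paired word, goes through the context-word embedding layer. Since both words come from the same vocabulary, the two embedding layers have the same size.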

The figure above shows the layers that result after this embedding step.

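Then the dot product of the center-word and paired-word embeddings, passed through a sigmoid, is used as the model's prediction, and the error against the label is backpropagated to update the embedding vectors of both words. After training, one can reportedly use the left (center-word) embedding matrix as the word embeddings, add the two matrices, or concatenate them.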
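A minimal NumPy sketch of this training loop for single (center, word, label) pairs, assuming two embedding matrices `W_in` (center words) and `W_out` (paired words), a sigmoid on the dot product as the prediction, binary cross-entropy as the loss, and a hand-derived gradient (dLoss/dScore = σ(score) - label). The names, toy sizes, and learning rate are assumptions; real Word2Vec implementations differ in detail.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def ns_step(W_in, W_out, c, w, label, lr=0.1):
    """One SGD step on a (center c, word w, label) pair; updates rows in place."""
    v_c = W_in[c].copy()                 # center-word embedding
    u_w = W_out[w].copy()                # paired-word embedding
    score = v_c @ u_w                    # dot product = raw prediction
    p = sigmoid(score)                   # probability that (c, w) is a real pair
    # Binary cross-entropy, written with logaddexp for numerical stability
    loss = np.logaddexp(0, -score) if label == 1 else np.logaddexp(0, score)
    g = p - label                        # dLoss/dScore
    W_in[c] -= lr * g * u_w              # backpropagate into both embeddings
    W_out[w] -= lr * g * v_c
    return float(loss)

rng = np.random.default_rng(0)
V, N = 6, 3
W_in = rng.normal(scale=0.5, size=(V, N))
W_out = rng.normal(scale=0.5, size=(V, N))

before = ns_step(W_in.copy(), W_out.copy(), 0, 1, 1)   # loss on copies, no lasting update
for _ in range(200):
    ns_step(W_in, W_out, c=0, w=1, label=1)            # positive pair
    ns_step(W_in, W_out, c=0, w=4, label=0)            # negative pair
after = ns_step(W_in.copy(), W_out.copy(), 0, 1, 1)

# After training: use W_in alone, the sum, or the concatenation
emb_sum = W_in + W_out
emb_cat = np.concatenate([W_in, W_out], axis=1)
print(round(before, 3), round(after, 3), emb_cat.shape)
```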

Equation

Expressed as an equation, the negative sampling we just walked through is:
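For reference, this is the negative-sampling loss as written in the CS224N lecture slides, with center-word vector v_c, true outside-word vector u_o, and K negative words u_k sampled from the vocabulary:

```latex
J_{\text{neg-sample}}(u_o, v_c, U) = -\log \sigma\!\left(u_o^{\top} v_c\right) - \sum_{k \in \{K \text{ sampled indices}\}} \log \sigma\!\left(-u_k^{\top} v_c\right)
```

Minimizing J pushes σ(u_o^T v_c) up for the real context word and pushes σ(-u_k^T v_c) up (equivalently σ(u_k^T v_c) down) for each sampled word.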

Let's split it at the minus sign in the middle into its two terms and analyze each one.

1. First term

This term is the dot product of the center word and a word inside the window, i.e., the positive sample. The sigmoid maps this dot product to a value between 0 and 1, and the goal is to maximize that value.

2. Second term

This term is the dot product of the center word and a randomly sampled word, i.e., the negative sample. This dot product is also mapped to a value between 0 and 1 by the sigmoid, but since it comes from a negative sample, the goal is to minimize it.

The sigmoid function is:
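For reference, the logistic sigmoid and the symmetry identity that explains why the negative-sample term feeds in the negated dot product:

```latex
\sigma(x) = \frac{1}{1 + e^{-x}}, \qquad \sigma(-x) = 1 - \sigma(x)
```

So maximizing log σ(-u_k^T v_c) is the same as maximizing log(1 - σ(u_k^T v_c)), i.e., pushing the predicted probability that a random word is a real context word toward 0.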

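As the graph above shows, the sigmoid outputs relatively small values for negative inputs and relatively large values for positive inputs. That is why the negative-sample term feeds the negated dot product into the sigmoid, while the positive-sample term uses the dot product as is.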

In summary, negative sampling aims to maximize the probability of the words that actually appear in the window and to minimize the probability of random words from outside the window.

Co-occurrence matrix

Instead of predicting context words as Skip-gram does, we can also count co-occurrences directly and build a count-based co-occurrence matrix.

1. Window based co-occurrence matrix

A window-based co-occurrence matrix is built by counting, within each sentence, how many times each word appears inside the window around every other word.

The advantage of this matrix is that it captures both syntactic and semantic information.

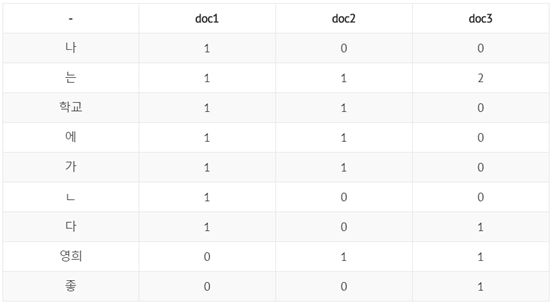
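A minimal sketch of building a symmetric window-based co-occurrence matrix, assuming a window of 1 and the toy corpus "I like deep learning / I like NLP / I enjoy flying" from the lecture slides (punctuation dropped here). Counting stays inside each sentence, as described above; `cooccurrence_matrix` is a hypothetical helper name.

```python
import numpy as np

def cooccurrence_matrix(sentences, window=1):
    """Count how often each pair of words appears within `window` of each other."""
    vocab = sorted({w for s in sentences for w in s.split()})
    idx = {w: i for i, w in enumerate(vocab)}
    X = np.zeros((len(vocab), len(vocab)), dtype=int)
    for s in sentences:
        words = s.split()
        for i, w in enumerate(words):
            # Look at neighbors on both sides, staying inside this sentence
            for j in range(max(0, i - window), min(len(words), i + window + 1)):
                if j != i:
                    X[idx[w], idx[words[j]]] += 1
    return vocab, X

corpus = ["I like deep learning", "I like NLP", "I enjoy flying"]
vocab, X = cooccurrence_matrix(corpus, window=1)
print(vocab)
print(X)
```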

2. Word-document matrix

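A word-document matrix is built by counting how many times each word appears in each document. It assumes that, among the many words in a document, certain words appear frequently (e.g., it is used for measuring similarity between documents and for tf-idf).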

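However, with such count-based matrices the vector size grows with the vocabulary. So they are used after reducing the dimensionality with SVD or LSA (which itself applies SVD). This packs most of the information into a much smaller matrix.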
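The reduction step can be sketched with NumPy's `np.linalg.svd`: build a small word-document count matrix, keep only the top-k singular values, and use the rows of U_k · S_k as k-dimensional word vectors. The three documents and `k=2` are toy assumptions for illustration.

```python
import numpy as np

docs = [
    "cat sat on the mat",
    "dog sat on the log",
    "stocks fell as markets fell",
]
vocab = sorted({w for d in docs for w in d.split()})
idx = {w: i for i, w in enumerate(vocab)}

# Word-document matrix: rows = words, columns = documents, entries = counts
X = np.zeros((len(vocab), len(docs)))
for j, d in enumerate(docs):
    for w in d.split():
        X[idx[w], j] += 1

# Thin SVD, then keep only the top-k singular values (truncated SVD)
U, S, Vt = np.linalg.svd(X, full_matrices=False)
k = 2
word_vecs = U[:, :k] * S[:k]          # each row is a k-dimensional word vector

# Rank-k reconstruction: the best rank-k approximation of X in Frobenius norm
X_k = word_vecs @ Vt[:k]
print(X.shape, "->", word_vecs.shape)
print(np.round(np.linalg.norm(X - X_k), 3))
```

The reconstruction error equals the discarded singular value(s), which is the sense in which truncated SVD keeps "most of the information".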

3. SVD (Singular Value Decomposition)

(To be updated.)

GloVe (Global Vectors for Word Representation)

1. Idea

So far we have looked at both count-based and direct-prediction methods.

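As the figure above shows, count-based methods such as the co-occurrence matrix train quickly and make efficient use of corpus statistics, but they capture little beyond simple word similarity and give disproportionate weight to very frequent words.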

Conversely, direct-prediction methods such as Word2Vec perform well and can capture complex patterns beyond word similarity, but their cost scales with corpus size and they make inefficient use of corpus statistics.

GloVe was introduced as a method that combines the strengths of both approaches.

The basic idea of GloVe is to:

- make similarity between embedded word vectors easy to measure (the strength of Word2Vec), while

- reflecting statistics from the entire corpus (the strength of the co-occurrence matrix).

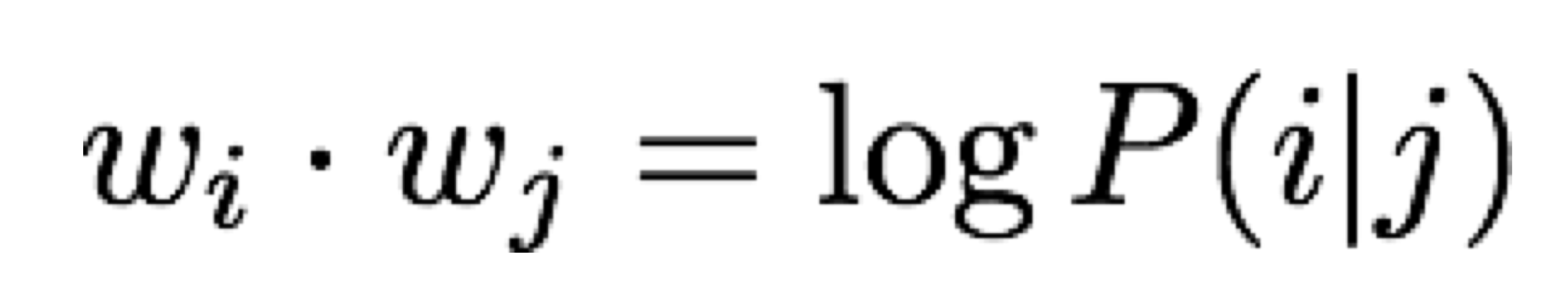

GloVe's objective is for the dot product of two word vectors to equal the log of their co-occurrence probability. As an equation:
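For reference, the log-bilinear form given in the CS224N slides, where P(i | j) is the probability that word i appears in the context of word j. Taking a difference of word vectors then turns a ratio of co-occurrence probabilities into a vector difference, which is the idea behind the co-occurrence-ratio example:

```latex
w_i \cdot w_j = \log P(i \mid j)
\qquad\Longrightarrow\qquad
w_x \cdot (w_a - w_b) = \log \frac{P(x \mid a)}{P(x \mid b)}
```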

Let's use the following example to define GloVe's objective precisely.

Following the notes below step by step should make it clear.

In this way we achieve the goal: the dot product of two word vectors represents the log of their co-occurrence probability.
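In the GloVe paper (Pennington et al., 2014), this goal becomes a weighted least-squares loss over the nonzero co-occurrence counts X_ij: the sum of f(X_ij)(w_i · w̃_j + b_i + b̃_j - log X_ij)^2, where f(x) = (x / x_max)^α for x < x_max and 1 otherwise, with x_max = 100 and α = 3/4 in the paper. Below is a minimal NumPy sketch that only evaluates this loss; the function names and the random toy matrix are assumptions, and training is omitted.

```python
import numpy as np

def glove_weight(x, x_max=100.0, alpha=0.75):
    """Weighting f(x): damps rare pairs and caps very frequent ones at 1."""
    return np.where(x < x_max, (x / x_max) ** alpha, 1.0)

def glove_loss(X, W, W_tilde, b, b_tilde):
    """Weighted least-squares GloVe loss over the nonzero entries of X."""
    i, j = np.nonzero(X)                          # log X_ij is undefined for zero counts
    pred = np.sum(W[i] * W_tilde[j], axis=1) + b[i] + b_tilde[j]
    err = pred - np.log(X[i, j])
    return float(np.sum(glove_weight(X[i, j]) * err ** 2))

rng = np.random.default_rng(0)
V, d = 5, 3
X = rng.integers(0, 20, size=(V, V)).astype(float)   # toy co-occurrence counts
W, W_tilde = rng.normal(size=(V, d)), rng.normal(size=(V, d))
b, b_tilde = np.zeros(V), np.zeros(V)
print(glove_loss(X, W, W_tilde, b, b_tilde))
```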

2. Results

The results are as follows:

The results show that the words nearest to frog are similar animals.
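A nearest-neighbor list like the one for frog is typically produced by ranking all words by cosine similarity to the query vector. A minimal sketch, assuming a hypothetical `nearest_neighbors` helper and tiny hand-made 2-D vectors rather than real GloVe embeddings:

```python
import numpy as np

def nearest_neighbors(query, vocab, E, topn=3):
    """Return the `topn` words whose vectors are most cosine-similar to `query`."""
    E_norm = E / np.linalg.norm(E, axis=1, keepdims=True)   # unit-length rows
    q = E_norm[vocab.index(query)]
    sims = E_norm @ q                                        # cosine similarities
    order = np.argsort(-sims)                                # most similar first
    return [(vocab[i], round(float(sims[i]), 3)) for i in order if vocab[i] != query][:topn]

vocab = ["frog", "toad", "lizard", "car", "truck"]
E = np.array([[1.0, 0.1], [0.9, 0.2], [0.7, 0.4], [0.1, 1.0], [0.2, 0.9]])  # made-up vectors
print(nearest_neighbors("frog", vocab, E, topn=2))
```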

Evaluation of word vectors

Next, we look at how word embedding models can be evaluated.

Evaluation methods fall into two kinds: intrinsic and extrinsic.

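- Intrinsic evaluation
  - Evaluates the vectors on a specific subtask (e.g., judging similarity between words).
  - Fast to compute.
  - Helps in understanding the system.
  - Its drawback is that it cannot tell whether the system is actually useful in a real task.

- Extrinsic evaluation
  - Evaluates the system by applying it to a real task.
  - Slow to compute.
  - When results are poor, it is hard to tell whether the problem is the system itself or its interaction with other subsystems.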

1. Extrinsic word vector evaluation

This approach evaluates the vectors by applying them directly to a real task. GloVe performed well in almost all extrinsic evaluations.

2. Intrinsic word vector evaluation

One concrete method is word vector analogies (an analogy here is a relation of the form "a is to b as c is to d").

For example, it checks whether the model can answer a question like the following:

e.g., man : woman :: king : ?

In equation form, this can be defined as the problem of finding d as follows:
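For reference, the CS224N slides write the analogy a : b :: c : ? as a search over the vocabulary for the word whose (unit-normalized) vector is most cosine-similar to x_b - x_a + x_c. For man : woman :: king : ?, a = man, b = woman, c = king:

```latex
d = \arg\max_{i} \frac{(x_b - x_a + x_c)^{\top} x_i}{\lVert x_b - x_a + x_c \rVert}
```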

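Examples of this intrinsic evaluation include semantic analogies (relations of meaning) and syntactic analogies (grammatical relations such as tense or comparative and superlative forms).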

- Semantic

- Syntactic
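Both semantic and syntactic analogies are answered by the same vector-offset search. A minimal sketch, assuming made-up 4-D vectors built so that a "femaleness" direction is constant, a hypothetical `solve_analogy` helper, and the common convention of excluding the three query words from the candidates:

```python
import numpy as np

def solve_analogy(a, b, c, vocab, E):
    """Answer a : b :: c : ? by maximizing cosine similarity to x_b - x_a + x_c."""
    E_norm = E / np.linalg.norm(E, axis=1, keepdims=True)   # unit-length word vectors
    idx = {w: i for i, w in enumerate(vocab)}
    target = E_norm[idx[b]] - E_norm[idx[a]] + E_norm[idx[c]]
    sims = E_norm @ (target / np.linalg.norm(target))
    for w in (a, b, c):
        sims[idx[w]] = -np.inf                               # never return a query word
    return vocab[int(np.argmax(sims))]

# Made-up vectors; dims = (royalty, femaleness, person, fruit)
vocab = ["man", "woman", "king", "queen", "apple"]
E = np.array([
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],
    [1.0, 1.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
])
print(solve_analogy("man", "woman", "king", vocab, E))
```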

Running the analogy evaluation on several embedding models while varying the dimension, corpus size, and so on showed that GloVe performed well.

3. Another Intrinsic word vector evaluation

This time we compute the cosine similarity between word vectors and compare these distances with human judgments of how similar the words are.

The following is a dataset called WordSim353.

GloVe also performs well under this evaluation.
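In this kind of evaluation, the agreement between model and humans is commonly summarized as the Spearman rank correlation between the models' cosine similarities and the human scores over all word pairs. A minimal NumPy-only sketch; the word pairs, human scores, and vectors below are invented for illustration (they are not actual WordSim353 entries), and the simple ranking assumes no tied values.

```python
import numpy as np

def spearman(x, y):
    """Spearman rank correlation, assuming no ties: Pearson correlation of the ranks."""
    rx = np.argsort(np.argsort(x))
    ry = np.argsort(np.argsort(y))
    return float(np.corrcoef(rx, ry)[0, 1])

def cosine(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# Invented vectors and human scores (0-10 scale), for illustration only
vecs = {
    "tiger": np.array([0.9, 0.1]), "cat": np.array([0.8, 0.3]),
    "car": np.array([0.1, 0.9]), "train": np.array([0.2, 0.8]),
}
pairs = [("tiger", "cat", 8.0), ("car", "train", 6.5), ("tiger", "car", 1.5), ("cat", "train", 1.0)]

model_sims = [cosine(vecs[a], vecs[b]) for a, b, _ in pairs]
human = [score for _, _, score in pairs]
print(round(spearman(model_sims, human), 3))
```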

4. Word senses and word sense ambiguity

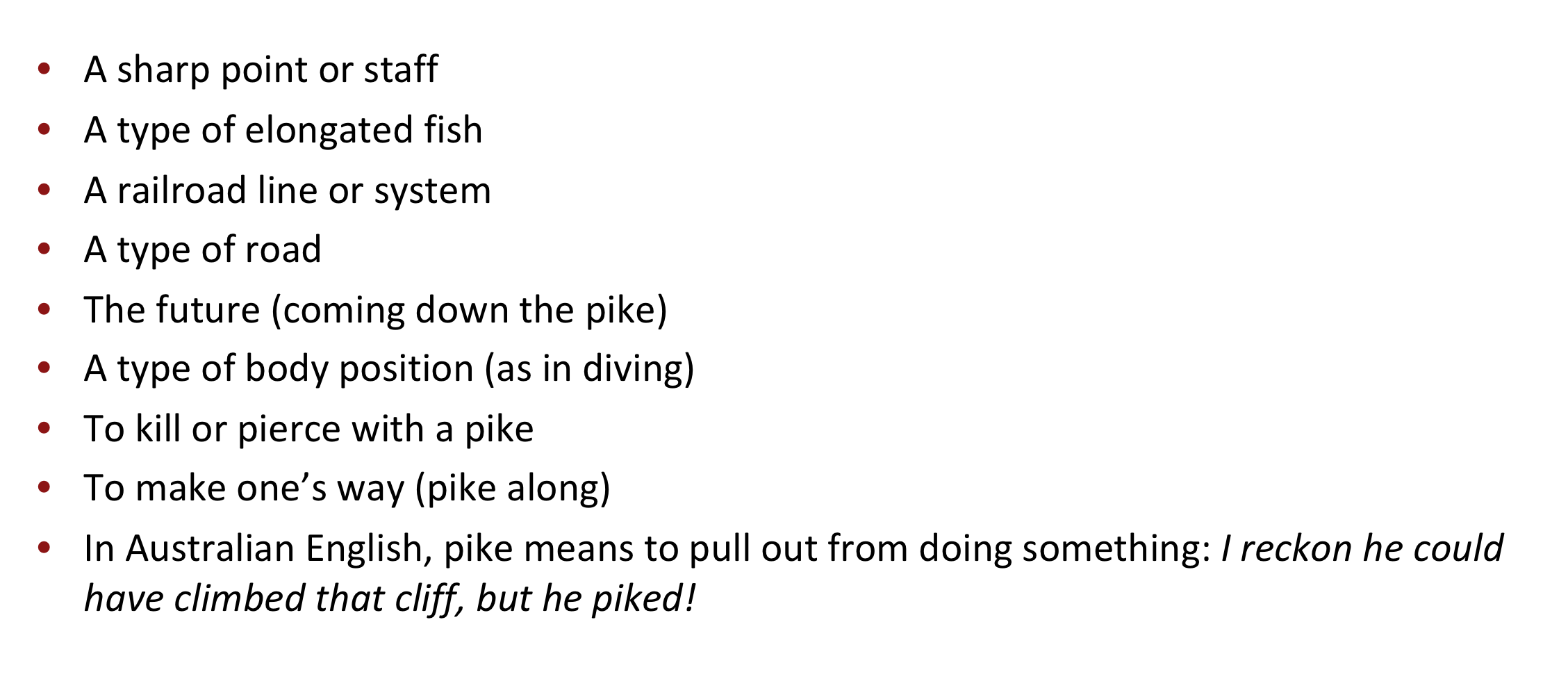

How should we represent a word that has several meanings?

Take the word pike as an example.

How can this problem be solved?

1. Improving Word Representations Via Global Context And Multiple Word Prototypes (Huang et al. 2012)

Cluster the context windows in which a given word appears, then re-embed the word as separate senses such as bank1, bank2, and bank3.

However, this method is imprecise, so it is not widely used.
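The clustering idea can be sketched as follows: represent each occurrence of an ambiguous word by the average of its context-word vectors, cluster those occurrence vectors, and treat each cluster as a separate sense (bank1, bank2, ...). This is a simplified illustration under assumptions: made-up 2-D context vectors and a plain k-means written from scratch, not the exact procedure of Huang et al. (2012).

```python
import numpy as np

def kmeans(points, k, iters=20, seed=0):
    """Plain k-means: returns a cluster label for each point."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(iters):
        # Assign each point to its nearest center
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of its points (keep it if the cluster is empty)
        for c in range(k):
            if np.any(labels == c):
                centers[c] = points[labels == c].mean(axis=0)
    return labels

# Made-up context-word vectors: dim 0 ~ "finance", dim 1 ~ "river"
ctx = {"money": [1.0, 0.0], "loan": [0.9, 0.1], "deposit": [0.8, 0.2],
       "river": [0.0, 1.0], "water": [0.1, 0.9], "shore": [0.2, 0.8]}
# Each occurrence of "bank" is represented by the average of its context vectors
occurrences = [["money", "loan"], ["deposit", "money"], ["river", "water"], ["shore", "river"]]
occ_vecs = np.array([np.mean([ctx[w] for w in window], axis=0) for window in occurrences])

labels = kmeans(occ_vecs, k=2)
senses = [f"bank{label + 1}" for label in labels]
print(senses)
```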

2. Linear Algebraic Structure of Word Senses, with Applications to Polysemy

This method keeps only one vector per word even when the word has several senses. In this approach, that single vector is a frequency-weighted average of the vectors for all of its senses.
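As presented in the CS224N slides, the single vector of a polysemous word such as pike is a linear superposition of its sense vectors, weighted by each sense's frequency f_i:

```latex
v_{\text{pike}} = \alpha_1 v_{\text{pike}_1} + \alpha_2 v_{\text{pike}_2} + \alpha_3 v_{\text{pike}_3},
\qquad \alpha_i = \frac{f_i}{f_1 + f_2 + f_3}
```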

With that, we have covered Word2Vec, co-occurrence matrices, GloVe, and how these word vectors are evaluated.