IDAS: Intent Discovery with Abstractive Summarization

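Lately I've been hooked on the New Intent Discovery (NID) task.

After reading the MTP-CLNN paper last time, I went looking for related papers with this idea in mind: "if I generate an intent for each utterance via prompting and then cluster on that, it should do well in the unsupervised setting."

Among them, a paper published at NLP4CONVAI@ACL 2023 matched the idea I had, so I read it.

If only I had thought of it a little sooner.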

Abstract

Method

- Clustering utterances based on their abstractive summaries can outperform existing intent discovery methods

- IDAS: performs intent discovery by prompting an LLM to generate a label for each utterance

- Compared against both unsupervised and semi-supervised methods

Introduction

- Fine-tuning on (utterance, intent) pairs is time-consuming and expensive → instead of using labeled utterances, simply cluster the unlabeled utterances

- Unlike prior work, uses a pre-trained encoder without fine-tuning and generates summaries that describe the utterances → this pushes them closer together or farther apart in the text space

- Here, summaries = labels → they keep only the essential information

- Hypothesis: labels produced as summaries represent the intent better and keep non-intent-related information from affecting vector similarity

ICL

- An approach where the LLM is given a prompt that contains the task together with a small number of (input, output) examples

- In the unsupervised setting, however, the utterances are not labeled

IDAS

Label generation via ICL (In-Context Learning) comes first

- Initial clustering of the utterances

- Run K-means clustering and select a few prototype utterances close to the cluster centroids

- Have the LLM generate a short label for each of them

- For an utterance x that is not a prototype, pick n utterances from the prototypes whose intent is similar to x and run ICL

- Because the generated labels may contain errors, a frozen pre-trained encoder is used to combine each utterance and its label into a single vector representation

- Run K-means clustering to infer the latent intents

Methodology

Task definition

- Define {(xi, yi) | i = 1…N} as a dataset of N utterances.

- For the utterances without intents, Dx = {xi | i = 1…N}, the goal is to infer y from E(xi) using an encoder E

Overview

- Initial Clustering

- Run K-means clustering to obtain the prototypes

- Label Generation

- Feed the instruction and one prototype at a time to the LLM as a prompt to generate a label

- Each generated (prototype xi, label li) pair is stored in a set M

- For each utterance xk that is not a prototype (k = 1…number of such utterances), compute its similarity to the items in M, take the top n, and prompt with (inst, (xi, li), xk) → the newly generated (xk, lk) is also added to M

- Label & Utterance Encoding

- The utterances and the labels generated above are turned into a single vector representation with a frozen pre-trained encoder

- Finally, K-means is run on the combined representation to infer the intents

1. Initial Clustering

Goal: obtain a diverse set of prototypes

1. All unlabeled data is encoded with the encoder

2. K-means partitions it into K clusters

3. In each cluster, the data point closest to the cluster centroid is designated as the prototype (pi)
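The three steps above can be sketched in a few lines of dependency-free Python. This is only an illustration under assumptions, not the paper's implementation: the toy 2-D points stand in for the encoder outputs E(x), and the farthest-point initialisation inside the hypothetical `kmeans` helper is a simple deterministic choice made for this sketch.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iterations=20):
    """Plain Lloyd-style K-means; returns (centroids, assignments)."""
    # Assumption: deterministic farthest-point initialisation for the sketch.
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(
            points,
            key=lambda p: min(squared_distance(p, c) for c in centroids),
        )
        centroids.append(list(farthest))

    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assign every point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assignments


def select_prototypes(points, centroids, assignments):
    """For each cluster, return the index of the member closest to its centroid."""
    prototypes = []
    for c, centroid in enumerate(centroids):
        member_ids = [i for i, a in enumerate(assignments) if a == c]
        if member_ids:
            prototypes.append(
                min(member_ids, key=lambda i: squared_distance(points[i], centroid))
            )
    return prototypes
```

Calling `kmeans(points, k)` followed by `select_prototypes(points, centroids, assignments)` yields one prototype index per non-empty cluster, which is what the label generation step consumes next.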

2. Label Generation

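Goal: generate labels that are as accurate as possible for the unlabeled data that are not prototypes

1. Prototype labeling

- Each pi is fed to the LLM together with an instruction

- instruction: “describe the question in a maximum of 5 words”

- The generated text is used as the label li

- The resulting (xi, li) pairs are added to the set M

2. Label generation via ICL

- Produces labels for the data that are not prototypes

- The prompt consists of the following three parts

1. instruction: “classify the question into one of the labels”

2. n sampled (utterance, label) pairs

3. the utterance to be labeled

- KATE (Knn-Augmented in-conText Example selection) is used to sample the n (utterance, label) pairs

- Compute the similarity between the utterance to be labeled and the utterances in the set M

- Select the n most similar utterances → denoted N_n(x)

- The generated (xk, lk) is added to the set M

- Notes on the instruction

- Even though the word “classify” is used, a new label can still be generated when an utterance with a new intent comes in

- It also minimizes variation among the labels generated for utterances that share the same intent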
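To make the two prompt types and the KATE-style retrieval concrete, here is a rough sketch. The two instruction strings come from the notes above; everything else is an assumption for illustration: the `Question:` / `Label:` layout, the function names, and the representation of the set M as a list of `(utterance, label, vector)` tuples. The actual LLM call is left out.

```python
import math

PROTOTYPE_INSTRUCTION = "Describe the question in a maximum of 5 words."
ICL_INSTRUCTION = "Classify the question into one of the labels."


def cosine_similarity(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_demonstrations(query_vec, memory, n):
    """Return the n (utterance, label) pairs in M most similar to the query.

    `memory` plays the role of the set M: a list of
    (utterance, label, vector) tuples (hypothetical layout).
    """
    ranked = sorted(
        memory,
        key=lambda item: cosine_similarity(query_vec, item[2]),
        reverse=True,
    )
    return [(utterance, label) for utterance, label, _ in ranked[:n]]


def build_prototype_prompt(utterance):
    """Prompt for a prototype: instruction plus the utterance, no examples."""
    return f"{PROTOTYPE_INSTRUCTION}\n\nQuestion: {utterance}\nLabel:"


def build_icl_prompt(utterance, demonstrations):
    """Prompt for a non-prototype: instruction, n examples, then the query."""
    parts = [ICL_INSTRUCTION]
    for demo_utterance, demo_label in demonstrations:
        parts.append(f"Question: {demo_utterance}\nLabel: {demo_label}")
    parts.append(f"Question: {utterance}\nLabel:")
    return "\n\n".join(parts)
```

After the LLM answers an ICL prompt, the new `(utterance, label, vector)` tuple would be appended to `memory`, so later utterances can retrieve it as a demonstration.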

3. Encoding Utterances and Labels

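Once step 2 is done, every utterance has a label

Goal: combine each utterance and its label into a single representation

- The combined vector representation φ_AVG(x, l) is computed as the average of the utterance encoding E(x) and the label encoding E(l)

- SMOOTHING: a step that further refines the combined representation of the encoded utterance and label

1. Suppresses features specific to an individual utterance

2. Emphasizes features shared across utterances

- Take the average of the φ_AVG vectors of utterance x and of the n’ utterances most similar to it

- This folds in the characteristics of utterances similar to x and gives a more general representation

- n’ is chosen with the silhouette score

- The silhouette score measures how well each sample is clustered; the n’ that maximizes it is selected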
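A minimal sketch of the two representations, written with plain lists so it runs without extra packages. Two points are assumptions made for the sketch rather than details confirmed in these notes: neighbours are ranked by cosine similarity between φ_AVG vectors, and x itself is included in the smoothing average alongside its n’ neighbours.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def phi_avg(utterance_vec, label_vec):
    """phi_AVG: element-wise average of the utterance and label encodings."""
    return [(u + l) / 2 for u, l in zip(utterance_vec, label_vec)]


def phi_smooth(avg_vectors, n_prime):
    """phi_SMOOTH: average each phi_AVG vector with its n_prime nearest neighbours.

    Assumption: the vector itself is part of the average, so each output is
    the mean of (1 + n_prime) vectors.
    """
    smoothed = []
    for i, vec in enumerate(avg_vectors):
        others = [j for j in range(len(avg_vectors)) if j != i]
        neighbours = sorted(
            others,
            key=lambda j: cosine_similarity(vec, avg_vectors[j]),
            reverse=True,
        )[:n_prime]
        group = [vec] + [avg_vectors[j] for j in neighbours]
        smoothed.append([sum(dim) / len(group) for dim in zip(*group)])
    return smoothed
```

Averaging over neighbours pulls each vector toward the local consensus, which is the "suppress individual features, emphasize shared ones" effect described above.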

4. Final Intent Discovery

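- At this point each of the N utterances has been combined with its label into a φ_SMOOTH(x, l) vector.

- K-means is then run on these vectors to discover the intents

- (From the implementation section later on): to choose n’, it is increased from 5 to 45, the silhouette score is computed on the resulting φ_SMOOTH vectors, and the n’ with the highest silhouette score is kept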
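The n’ search can be sketched as a loop over candidate values that scores each resulting clustering with the silhouette coefficient. This is a hedged illustration: `smooth_fn` and `cluster_fn` are hypothetical callables standing in for the smoothing and K-means steps, Euclidean distance is assumed for the silhouette, and the notes only give the range 5 to 45, so the candidate step size is left to the caller.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def silhouette_score(vectors, labels):
    """Mean silhouette coefficient over all samples (needs >= 2 clusters)."""
    clusters = {}
    for idx, label in enumerate(labels):
        clusters.setdefault(label, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("silhouette score needs at least two clusters")

    total = 0.0
    for i, label in enumerate(labels):
        own = [j for j in clusters[label] if j != i]
        if not own:
            continue  # a singleton cluster contributes a score of 0
        # a: mean distance to the other members of the same cluster.
        a = sum(euclidean(vectors[i], vectors[j]) for j in own) / len(own)
        # b: mean distance to the members of the nearest other cluster.
        b = min(
            sum(euclidean(vectors[i], vectors[j]) for j in members) / len(members)
            for other, members in clusters.items()
            if other != label
        )
        if max(a, b) > 0:
            total += (b - a) / max(a, b)
    return total / len(labels)


def select_n_prime(candidates, smooth_fn, cluster_fn):
    """Return (best_n_prime, best_score) over the candidate values.

    smooth_fn(n_prime) -> list of smoothed vectors   (hypothetical callable)
    cluster_fn(vectors) -> list of cluster ids       (hypothetical callable)
    """
    best_n, best_score = None, -math.inf
    for n_prime in candidates:
        vectors = smooth_fn(n_prime)
        score = silhouette_score(vectors, cluster_fn(vectors))
        if score > best_score:
            best_n, best_score = n_prime, score
    return best_n, best_score
```

Because the silhouette score needs no ground-truth labels, this selection stays fully unsupervised.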

Experimental Setup

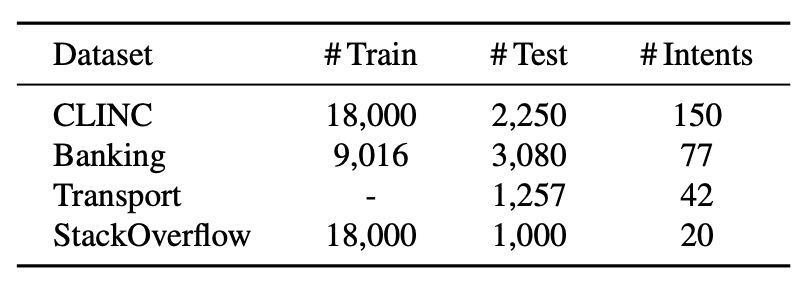

1. Datasets

CLINC, BANKING, StackOverflow, private dataset from a transportation company

2. Baselines

- MTP-CLNN

- However, MTP-CLNN was trained on the CLINC dataset, so comparing against it on CLINC is not a fair comparison

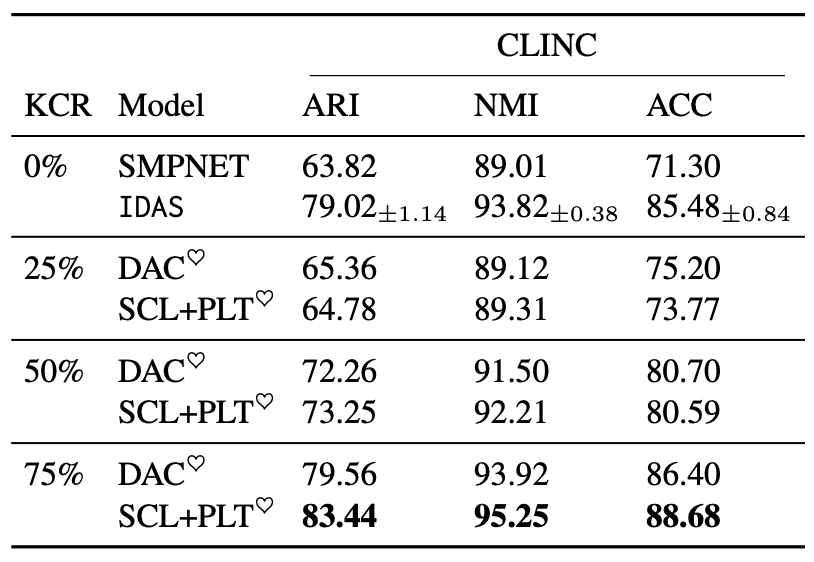

- On CLINC only, the comparison is against the semi-supervised methods DAC and SCL+PLT

- The Known Class Ratio is increased through 25%, 50%, and 75%

3. Evaluation

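- Metrics: ARI, NMI, and ACC (with Hungarian matching between clusters and labels)

- The paper notes that the labels produced by IDAS's label generation process can change depending on the order of the data

- The K-means result can change with the order of the utterances

- If the order changes, the prototypes selected initially and the order in which the remaining utterances get labeled can both change

- To see how much the utterance order affects the algorithm, the utterances are shuffled and steps 1-2 of IDAS are repeated → this shows how stable the result is with respect to the initial ordering and lets the variability be measured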
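As a small illustration of the ACC metric and of reporting variability across shuffled runs, the sketch below maps clusters to gold intents and then summarizes several scores. Note the swap: instead of the Hungarian algorithm it brute-forces every one-to-one mapping with `itertools.permutations`, which gives the same optimum but is only feasible for a handful of clusters. The function names and the toy numbers are assumptions for the example.

```python
from itertools import permutations
from statistics import mean, stdev


def clustering_accuracy(true_labels, cluster_ids):
    """ACC under the best one-to-one mapping from clusters to gold labels.

    Brute-force stand-in for Hungarian matching; use it only when the
    number of clusters is small.
    """
    classes = sorted(set(true_labels))
    clusters = sorted(set(cluster_ids))
    if len(clusters) > len(classes):
        raise ValueError("this sketch expects no more clusters than classes")

    best_correct = 0
    for assigned in permutations(classes, len(clusters)):
        mapping = dict(zip(clusters, assigned))
        correct = sum(
            mapping[c] == t for t, c in zip(true_labels, cluster_ids)
        )
        best_correct = max(best_correct, correct)
    return best_correct / len(true_labels)


def summarize_runs(scores):
    """Mean and sample standard deviation over repeated (shuffled) runs."""
    return mean(scores), stdev(scores)
```

For example, running the pipeline on several shuffled copies of the data and passing the resulting ACC values to `summarize_runs` gives a mean and a spread that reflect the order sensitivity discussed above.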

4. Implementation

- Encoder

- For MTP-CLNN, MTP is used

- For SCL+PLT and DAC, SBERT is used

- Language models and prompts

- LM

- GPT-3 is used

- LM temperature = 0, because the same intent should not produce several label variations

- Prompts

- For banking, chatbot, and transport: “Describe the domain question in a maximum of 5 words”

- StackOverflow is about topics, so: “Identify the technology in question”

- Nearest Neighbor retrieval

- Cosine similarity is used as the similarity function

- n = 8: later experiments showed little change when n was increased further

- n’ (5 to 45) is chosen at the end by running K-means several times and picking the value with the highest average silhouette score

Results and Discussion

1. Main results

1. Unsupervised

- No labels are available

- Only the test set is available for evaluating the model's clusters

- The unlabeled test set is used in the encoder pre-training of MTP and MTP-CLNN

- IDAS vs. MTP-CLNN

- Diamond: MTP-CLNN pre-trained in its original way

- Spade: pre-trained with the unlabeled test data included

- IDAS comes out ahead on every dataset and metric except ACC on BANKING

- Analysis

- Under this paper's setup (spade), the performance of IDAS and MTP-CLNN is lower than under the original setup (diamond)

- This is because this paper's setup trains on far fewer samples

2. Semi-supervised

- IDAS uses a KCR (Known Class Ratio) of 0%

- IDAS outperforms both DAC and SCL+PLT at 25% and 50%

2. Ablations

1. Encoding strategies

- All four datasets are used

- From top to bottom: 1. encoding the utterance only, 2. encoding the label only, 3. the average of the utterance encoding and the label encoding, 4. the average followed by smoothing

- The other three beat utterance-only encoding on every dataset and evaluation metric → summarizing utterances improves intent discovery

2. Inferring the number of smoothing neighbors

- Pick n’ from {5,…,45} and compute the silhouette score

- [Table] Silhouette score by n’ value

3. Random vs. Nearest Neighbor

- IDAS uses KATE to select N_n(x), the n data points closest to x

- Using KATE gives a large score improvement over not using ICL

- Small values such as n = 1, 2, 4 make little difference in score

- Scores are good at n = 8 and 16

- Prior work) increasing n beyond a certain point does not change performance much → n = 8 was chosen

4. Overestimating the number of prototypes

- Clustering with twice the ground-truth number of clusters (K × 2) did not degrade performance much

Conclusion & Limitations

Conclusion

IDAS: uses a frozen pre-trained encoder → summarizes utterances into intents

Labeling with an LLM works better than training an unsupervised encoder

Limitations

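- There is no step that estimates K for K-means: it always starts from twice the ground-truth number

- In practice the ground truth is unknown, so a way to estimate K is needed

- That said, overestimating by a factor of 2 did not hurt performance much

- Only GPT-3 was used; it is an open question whether other models would do better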