[CS224N] 4. Syntactic Structure and Dependency Parsing

Lecture 4 covers methods for analyzing the structure of sentences. It focuses on dependency parsing, introducing both earlier approaches and modern neural dependency parsing.

1. Two views of linguistic structure

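There are two main ways to describe the structure of a sentence: constituency parsing and dependency parsing.

In brief, constituency parsing analyzes a sentence through the constituents (phrases) it is built from, while dependency parsing analyzes it through the dependency relations between words. Let us look at each in more detail.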

1. Constituency Parsing: Context-Free-Grammars(CFGs)

A context-free grammar is easiest to picture as the exercise from English class where you analyze a sentence by writing the part of speech under each word.

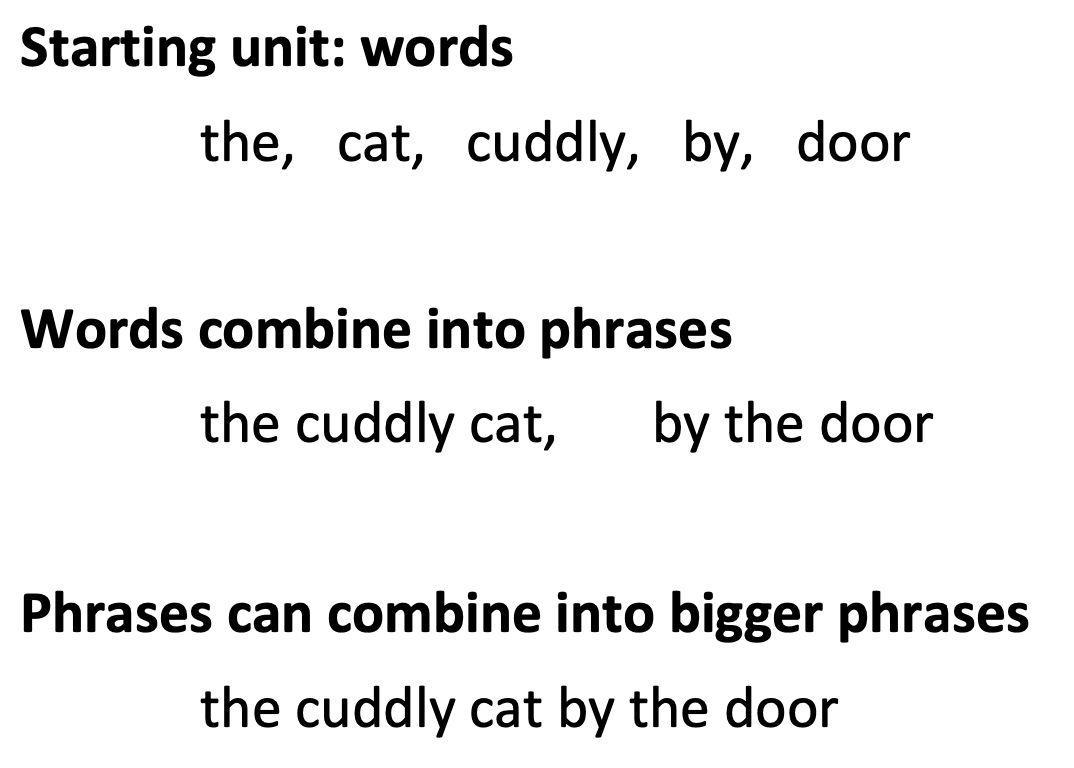

Starting from the individual words, as in the figure, we get the-Det, cat-N, cuddly-Adj, by-P, door-N.

Grouping these words into phrases gives the cuddly cat (noun phrase) and by the door (prepositional phrase). Phrases can in turn combine into larger phrases.
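The way words combine into phrases, and phrases into larger phrases, can be sketched with a toy grammar. The `LEXICON` and `RULES` below are assumptions made up for this illustration (they are not the grammar from the lecture), and `parses_as` is a hypothetical helper that only checks whether a word sequence can be derived from a given phrase type.

```python
from functools import lru_cache

# Toy lexicon and phrase rules, assumed for illustration only.
LEXICON = {"the": "Det", "cuddly": "Adj", "cat": "N", "by": "P", "door": "N"}
RULES = {
    "NP": [("Det", "N"), ("Det", "Adj", "N"), ("NP", "PP")],
    "PP": [("P", "NP")],
}


def parses_as(symbol, words):
    """Return True if `words` can be derived from `symbol` under RULES."""
    words = tuple(words)

    @lru_cache(maxsize=None)
    def derive(sym, i, j):
        # A part-of-speech tag covers exactly one word.
        if sym not in RULES:
            return j - i == 1 and LEXICON.get(words[i]) == sym
        return any(match(rhs, i, j) for rhs in RULES[sym])

    def match(rhs, i, j):
        # Split words[i:j] so that every symbol in rhs covers at least one word.
        if len(rhs) == 1:
            return derive(rhs[0], i, j)
        return any(
            derive(rhs[0], i, k) and match(rhs[1:], k, j)
            for k in range(i + 1, j - len(rhs) + 2)
        )

    return derive(symbol, 0, len(words))


print(parses_as("NP", "the cuddly cat".split()))
print(parses_as("PP", "by the door".split()))
print(parses_as("NP", "the cuddly cat by the door".split()))
```

The rule `NP -> NP PP` is what lets a noun phrase and a prepositional phrase combine into a larger noun phrase.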

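However, this approach has clear limits as a way of understanding sentence structure. It does not capture semantic structure, and it does not show directly how one word relates to another, for example when a pronoun in the sentence refers to a particular noun.

Dependency parsing is used to address this.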

2. Dependency Parsing

The main advantage of dependency parsing is that it shows which word depends on which other word.

As an example, consider the following sentence:

Bills on ports and immigration were submitted by Senator Brownback, Republican of Kansas.

Expressed as dependencies, this sentence can be drawn as a tree, as shown below.

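The lecture gives several conventions for drawing a dependency structure:

- A pseudo-element ROOT is added so that every word is ultimately headed by ROOT.

- Every word has exactly one head. A head, however, can have many dependents.

- The lecture treats every preposition as a case marker, that is, as a dependent of the noun it accompanies. In the figure, for example, by appears as one of the dependents of Brownback.

- Arrows are drawn from the head to the dependent. The direction is only a convention, but the lecture uses this one throughout for consistency.

Note that the label written on each arc in the figure names the relation between the two words.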

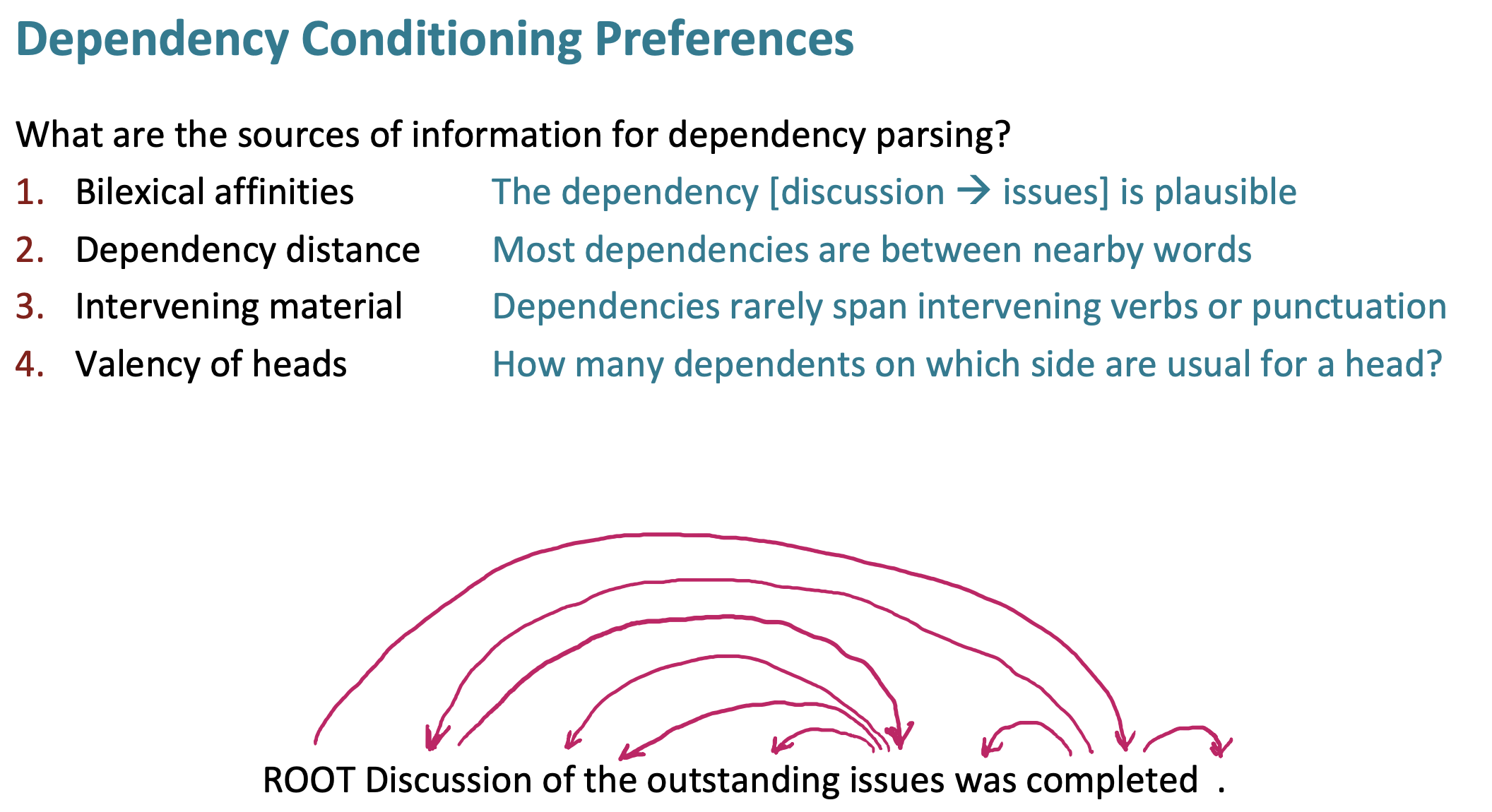
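These conventions map naturally onto a "head array". The sketch below uses a shortened version of the example sentence; the head indices and the relation labels (written in a Universal Dependencies style) are my own assumptions for illustration and may not match the figure exactly. `dependents_of`, `reaches_root`, and `is_valid_tree` are hypothetical helper names.

```python
# Index 0 is the pseudo-element ROOT; HEADS[i] is the single head of token i.
TOKENS = ["ROOT", "Bills", "were", "submitted", "by", "Brownback"]
HEADS = [None, 3, 3, 0, 5, 3]
# Relation labels are assumed for illustration.
LABELS = [None, "nsubj:pass", "aux:pass", "root", "case", "obl"]


def dependents_of(head, heads):
    """A head may have many dependents."""
    return [i for i, h in enumerate(heads) if h == head]


def reaches_root(token, heads):
    """Follow head links upward; every token should end at ROOT (index 0)."""
    seen = set()
    while token != 0:
        if token in seen:  # a cycle means this is not a tree
            return False
        seen.add(token)
        token = heads[token]
    return True


def is_valid_tree(heads):
    return all(reaches_root(i, heads) for i in range(1, len(heads)))


print(is_valid_tree(HEADS))
for dep in dependents_of(TOKENS.index("submitted"), HEADS):
    print(f"submitted -{LABELS[dep]}-> {TOKENS[dep]}")
print([TOKENS[d] for d in dependents_of(TOKENS.index("Brownback"), HEADS)])
```

Storing one head per token makes the "exactly one head" rule hold by construction, while the preposition by shows up as a dependent of Brownback, matching the case convention.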

Dependency Conditioning Preferences

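What sources of information can a dependency parser draw on?

- Bilexical effects: information about the semantic relation between two words, that is, how plausible a dependency between them is.

- Dependency distance: most dependencies hold between nearby words.

- Intervening material: dependencies rarely span intervening verbs or punctuation.

- Valency of heads: depending on what the head is, there are fairly regular patterns in the dependents it takes (their types and their direction, left or right). For example, the article the takes no dependents, whereas a verb takes many. English nouns also take many dependents: adjective dependents (usually) sit to the left, and prepositional dependents to the right.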

Projectivity

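By the definition of a projective parse, the following must hold:

- When the words are laid out in linear order with all arcs drawn above them, no two dependency arcs cross.

- Dependencies that correspond to a CFG tree are necessarily projective, because each constituent covers a contiguous span of words. The tree as a whole still has a single ROOT.

Most sentence structures are projective in this sense, but non-projective structures are sometimes allowed in order to handle displaced constituents, such as a preposition separated from the word it belongs with. Compare the following two sentences:

- Who did Bill buy the coffee from yesterday?

- From who did Bill buy the coffee yesterday?

The first sentence, where from is stranded far from who, needs a non-projective structure, while the second can be analyzed projectively.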
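The "no crossing arcs" condition can be checked mechanically. The sketch below assumes a head-array encoding (index 0 is ROOT) and head assignments that I chose for the two example sentences following the case-marker convention; they are illustrative, not taken from the lecture slides. `is_projective` is a hypothetical helper name.

```python
from itertools import combinations


def is_projective(heads):
    """Return True if no two dependency arcs cross.

    heads[d] is the head index of token d; index 0 is ROOT (heads[0] unused).
    """
    arcs = [(min(h, d), max(h, d)) for d, h in enumerate(heads) if d > 0]
    for (a, b), (c, d) in combinations(arcs, 2):
        # Two arcs cross when exactly one endpoint of one lies strictly
        # inside the span of the other.
        if a < c < b < d or c < a < d < b:
            return False
    return True


# Tokens: 1 Who, 2 did, 3 Bill, 4 buy, 5 the, 6 coffee, 7 from, 8 yesterday
STRANDED = [None, 4, 4, 4, 0, 6, 4, 1, 4]
# Tokens: 1 From, 2 who, 3 did, 4 Bill, 5 buy, 6 the, 7 coffee, 8 yesterday
FRONTED = [None, 2, 5, 5, 5, 0, 7, 5, 5]

print(is_projective(STRANDED))  # the who-from arc crosses the buy-yesterday arc
print(is_projective(FRONTED))
```

Under these assumed analyses, the arc linking who and from in the first sentence crosses the arc from buy to yesterday, which is what makes it non-projective.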

2. Methods of dependency parsing

Dependency parsing can be done with dynamic programming, graph algorithms, constraint satisfaction, and various other methods, but this lecture concentrates on the one it presents as most useful in practice, transition-based parsing.

Greedy transition-based parsing

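As the word greedy suggests, this method commits at each step to whichever action looks best in the current state, without going back to revise it.

The algorithm has the following components:

- Every word that still needs processing is either on the stack or in the buffer.

- There are three possible actions:

  - Shift: move the first word of the buffer onto the top of the stack.

  - Left-Arc: take the top two words of the stack, make the top word the head of the second word (second <- top), and remove the dependent from the stack.

  - Right-Arc: take the top two words of the stack, make the second word the head of the top word (second -> top), and remove the dependent from the stack.

- Start and end conditions:

  - start: the stack holds only ROOT, and the buffer holds all the words of the sentence.

  - end: the buffer is empty, and the stack holds only ROOT.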
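The transition system can be sketched in a few lines. This is a minimal illustration assuming the arc-standard variant, with ROOT starting on the stack and the action sequence supplied by hand rather than predicted; `parse` and the action names are hypothetical, and the sketch does not guard against invalid actions such as making ROOT a dependent.

```python
def parse(words, actions):
    """Apply a sequence of transitions and return (arcs, finished).

    arcs is a list of (head, dependent) pairs.
    """
    stack = ["ROOT"]
    buffer = list(words)
    arcs = []
    for action in actions:
        if action == "SHIFT":
            # Move the first word of the buffer onto the stack.
            stack.append(buffer.pop(0))
        elif action == "LEFT-ARC":
            # Top of stack is the head; the word below it is the dependent.
            head = stack[-1]
            dependent = stack.pop(-2)
            arcs.append((head, dependent))
        elif action == "RIGHT-ARC":
            # Second word on the stack is the head; the top is the dependent.
            dependent = stack.pop()
            head = stack[-1]
            arcs.append((head, dependent))
        else:
            raise ValueError(f"unknown action: {action}")
    finished = not buffer and stack == ["ROOT"]
    return arcs, finished


ACTIONS = ["SHIFT", "SHIFT", "LEFT-ARC", "SHIFT", "RIGHT-ARC", "RIGHT-ARC"]
arcs, finished = parse(["I", "ate", "fish"], ACTIONS)
print(arcs)
print(finished)
```

For the sentence "I ate fish", this action sequence attaches I and fish to ate, then attaches ate to ROOT, leaving an empty buffer and only ROOT on the stack.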

An automatic parser, then, is a classifier that chooses one of these actions at every step.

How does the parser choose among the three actions (that is, how does it classify)? The answer is to train a classifier with machine learning; the parser built this way is called MaltParser.

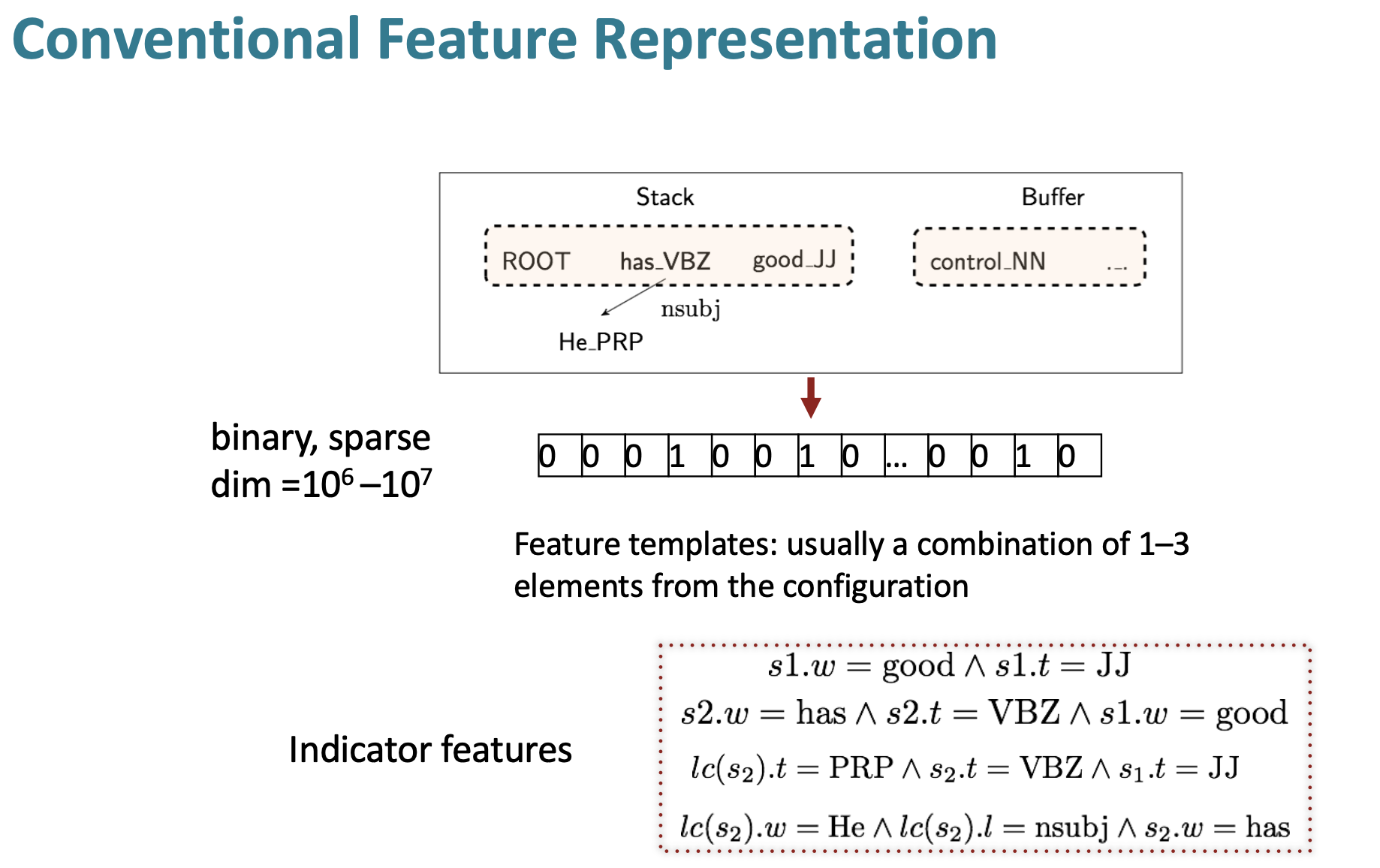

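MaltParser feeds features such as the word on top of the stack, that word's part of speech (POS), the first word in the buffer, and that word's POS into the classifier, which uses them to pick the best parsing action at each step. MaltParser is very fast and accurate for its speed, which is said to make it useful for parsing large volumes of text such as the web.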
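As a rough illustration of what such classifier inputs look like, the sketch below turns a parser configuration into named indicator features. The feature names (`s1.w`, `s1.t`, `b1.w`, `b1.t`), the POS tags, and the function `extract_features` are assumptions for this example and are not MaltParser's actual feature templates or API.

```python
def extract_features(stack, buffer, pos_tags):
    """Return indicator feature names for the current configuration."""
    features = []
    if stack:
        s1 = stack[-1]  # word on top of the stack
        features.append(f"s1.w={s1}")
        features.append(f"s1.t={pos_tags[s1]}")
    if buffer:
        b1 = buffer[0]  # first word in the buffer
        features.append(f"b1.w={b1}")
        features.append(f"b1.t={pos_tags[b1]}")
    if stack and buffer:
        # A combination feature: both words together.
        features.append(f"s1.w={stack[-1]}&b1.w={buffer[0]}")
    return features


POS = {"ROOT": "ROOT", "I": "PRP", "ate": "VBD", "fish": "NN"}
print(extract_features(["ROOT", "I"], ["ate", "fish"], POS))
```

Each distinct feature name becomes one dimension of the classifier's input, which is why combination features make the feature space grow so quickly.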

However, MaltParser encodes these features as one-hot (indicator) vectors and concatenates them, so the dimensionality becomes very large. Neural networks were introduced to address this.

3. Neural dependency parser

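Conventional feature-based parsers of this kind have three problems:

- Sparseness: representing words as a bag of words makes the dimensionality enormous, and each feature is built by combining a word with other information (there is a feature table), so the resulting features are extremely sparse.

- Incomplete: if a configuration contains rare words or rare combinations of words that were never seen before, they are missing from the feature table, so the model is incomplete.

- Expensive computation: this follows from the first problem. The huge feature space is stored as a hash map (feature id : weight of the feature), and looking features up in such a large space takes a great deal of time in itself.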
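The first two problems are easy to see on a toy example. The sketch below builds a feature table from three made-up training configurations and then encodes a new configuration; the feature strings and the helpers `build_feature_table` and `to_indicator_vector` are hypothetical and only meant to illustrate the idea.

```python
def build_feature_table(training_feature_lists):
    """Assign one dimension (an integer id) to every feature seen in training."""
    table = {}
    for features in training_feature_lists:
        for name in features:
            table.setdefault(name, len(table))
    return table


def to_indicator_vector(features, table):
    """Encode features as a 0/1 vector; also report features not in the table."""
    vector = [0] * len(table)
    unseen = []
    for name in features:
        if name in table:
            vector[table[name]] = 1
        else:
            unseen.append(name)
    return vector, unseen


# Made-up training configurations, for illustration only.
TRAIN = [
    ["s1.w=I", "s1.t=PRP", "b1.w=ate", "b1.t=VBD"],
    ["s1.w=ate", "s1.t=VBD", "b1.w=fish", "b1.t=NN"],
    ["s1.w=cat", "s1.t=NN", "b1.w=sat", "b1.t=VBD"],
]
TABLE = build_feature_table(TRAIN)
VECTOR, UNSEEN = to_indicator_vector(
    ["s1.w=I", "s1.t=PRP", "b1.w=devoured", "b1.t=VBD"], TABLE
)
print(len(TABLE), sum(VECTOR))  # dimensions vs. active dimensions
print(UNSEEN)                   # features the table has never seen
```

Even in this tiny case only a few dimensions are active per configuration, and the rare word devoured has no entry at all; with a realistic vocabulary and combination features both effects become far more severe.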

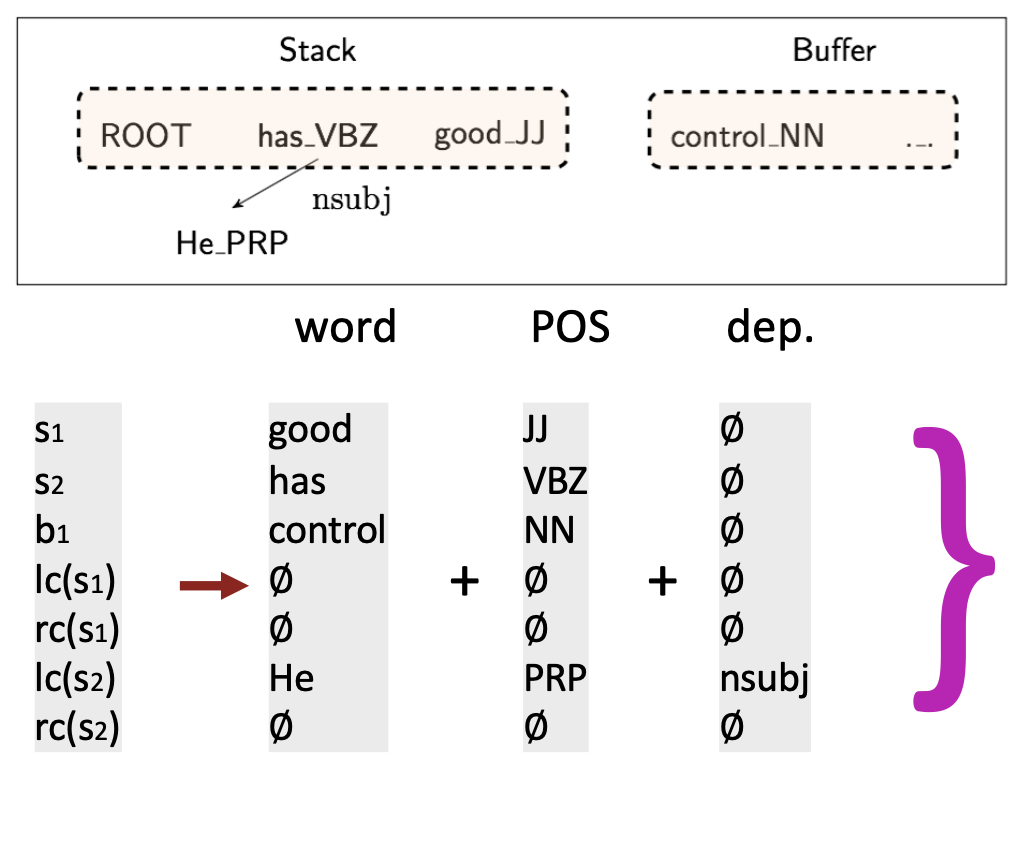

1. First Win: Distributed Representations

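To address these problems, the following were introduced:

- Each word is represented as a d-dimensional dense vector (e.g. a word embedding), so that similar words end up close together in vector space.

- Parts of speech and dependency labels are also represented as vectors and included in the input.

As in the figure, the vectors for words, parts of speech, and dependency labels are all concatenated into one long vector.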

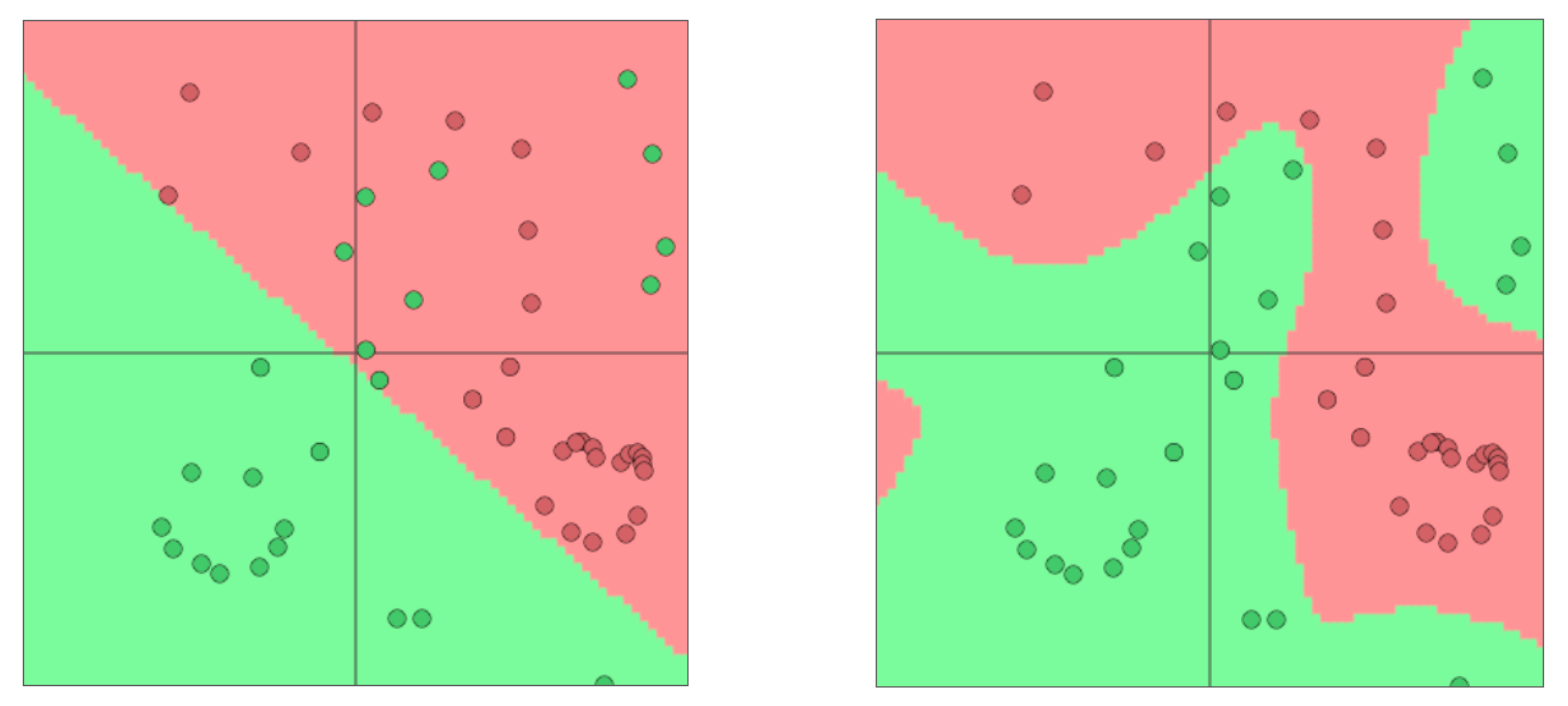
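The lookup-and-concatenate step can be sketched as follows. The embedding size `D`, the tiny vocabularies, the random initial values, and the helper names `make_table` and `embed_configuration` are all assumptions for illustration; in a real parser the embeddings would be learned, and many more stack and buffer positions would be used.

```python
import random

D = 4  # embedding dimension, assumed small for readability
rng = random.Random(0)


def make_table(symbols, dim=D):
    """Give every symbol a dense vector with small random values."""
    return {s: [rng.uniform(-0.1, 0.1) for _ in range(dim)] for s in symbols}


WORD_EMB = make_table(["<NULL>", "ROOT", "I", "ate", "fish"])
POS_EMB = make_table(["<NULL>", "ROOT", "PRP", "VBD", "NN"])
LABEL_EMB = make_table(["<NULL>", "nsubj", "obj"])


def embed_configuration(words, pos_tags, labels):
    """Look up each selected element and concatenate into one input vector."""
    x = []
    for w in words:
        x.extend(WORD_EMB[w])
    for t in pos_tags:
        x.extend(POS_EMB[t])
    for label in labels:
        x.extend(LABEL_EMB[label])
    return x


# Top of stack "I", first buffer word "ate", and no arc label assigned yet.
x = embed_configuration(["I", "ate"], ["PRP", "VBD"], ["<NULL>"])
print(len(x))  # (2 words + 2 tags + 1 label) * D
```

The input length depends only on how many elements are selected and on `D`, not on the vocabulary size, which is what removes the sparseness of the indicator representation.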

2. Second Win: Deep Learning classifiers are non-linear classifiers

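A softmax classifier on its own gives only linear decision boundaries. Putting a non-linear hidden layer in front of it lets the network learn non-linear decision boundaries, which yields higher accuracy.

The neural network multi-class classifier built on these ideas is as follows:

What happens here is that the hidden layer, whose weights are updated by backpropagation, re-represents the input in a new feature space. This moves the inputs around so that the classes become easier to separate, and the softmax layer, which is linear in the hidden representation, can then classify them more effectively.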
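A forward pass through such a classifier can be sketched in plain Python. This assumes a single ReLU hidden layer followed by a softmax over the three actions; the layer sizes, the random weights, and the names `affine` and `predict` are placeholders for illustration, and training by backpropagation is not shown.

```python
import math
import random


def affine(weights, x, bias):
    """Compute weights @ x + bias for a list-of-rows weight matrix."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x, W, b1, U, b2):
    """Hidden layer re-represents x; softmax turns scores into probabilities."""
    h = relu(affine(W, x, b1))
    return softmax(affine(U, h, b2))


rng = random.Random(0)
N_IN, N_HIDDEN, N_ACTIONS = 6, 5, 3  # sizes assumed for illustration
W = [[rng.uniform(-0.5, 0.5) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
b1 = [0.0] * N_HIDDEN
U = [[rng.uniform(-0.5, 0.5) for _ in range(N_HIDDEN)] for _ in range(N_ACTIONS)]
b2 = [0.0] * N_ACTIONS

x = [rng.uniform(-1.0, 1.0) for _ in range(N_IN)]
probs = predict(x, W, b1, U, b2)
print(dict(zip(["SHIFT", "LEFT-ARC", "RIGHT-ARC"], probs)))
```

Without the `relu` call, the two affine maps would collapse into a single linear map, and the classifier would again have only linear decision boundaries.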

4. More about neural networks: Dropout & Non-linearities

1. Dropout

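In classical machine learning, regularization was used mainly to prevent overfitting. In large neural networks, its main goal becomes preventing feature co-adaptation, that is, keeping the network from leaning too heavily on any one particular feature. Dropout is a regularization method of this kind.

As in the figure, some of the inputs are randomly dropped during training.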
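A minimal sketch of the idea is shown below. It assumes the commonly used "inverted dropout" variant, in which the surviving values are rescaled during training so that nothing needs to change at test time; the function name `dropout` and its parameters are illustrative rather than any particular library's API.

```python
import random


def dropout(values, p=0.5, training=True, rng=random):
    """Zero each value with probability p during training.

    Kept values are scaled by 1 / (1 - p) so the expected sum is unchanged.
    At test time (training=False) the input is returned as is.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(values)
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]


rng = random.Random(0)
print(dropout([1.0, 1.0, 1.0, 1.0, 1.0, 1.0], p=0.5, rng=rng))
print(dropout([1.0, 2.0, 3.0], training=False))
```

Because a different random subset is dropped on every training step, no unit can rely on a specific other unit always being present.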

2. Non-linearities

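There are many important non-linearities, such as sigmoid, tanh, and hard tanh, but the one most commonly used in neural nets is ReLU. The sigmoid squashes its output into the range between 0 and 1 and saturates for large inputs, so the gradient shrinks toward zero as it is propagated through many layers; ReLU was adopted to mitigate this vanishing-gradient problem.

The rightmost function shown is ReLU. It returns 0 for negative inputs and the input itself for positive inputs, so the gradient is exactly 1 wherever the unit is active, which greatly reduces the vanishing-gradient problem (units with negative input still receive zero gradient). It is also said to be about six times faster than earlier activation functions.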
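The difference in gradient behavior can be checked numerically. The sketch below defines the activations mentioned above and compares what happens when the local gradient is multiplied across 10 layers; it ignores the weight matrices, so it is a simplified illustration of the effect rather than a full backpropagation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # never larger than 0.25


def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2


def hard_tanh(z):
    return max(-1.0, min(1.0, z))


def relu(z):
    return max(0.0, z)


def relu_grad(z):
    return 1.0 if z > 0 else 0.0


LAYERS = 10
# Best case for the sigmoid: every unit sits at z = 0, where its slope peaks.
sigmoid_chain = sigmoid_grad(0.0) ** LAYERS
# ReLU units with positive input pass the gradient through unchanged.
relu_chain = relu_grad(1.0) ** LAYERS
print(sigmoid_chain, relu_chain)
```

Even in the sigmoid's best case the chained gradient falls below one millionth after 10 layers, while an active ReLU path keeps it at 1.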

This concludes our look at how dependency parsers have developed and how neural networks are used for dependency parsing.