- Abstract
- Introduction
- Related Work
  - 1. CRS (Conversational Recommender Systems)
  - 2. Contrastive Learning
- Pre-training for Contextual Knowledge Injection
  - 1. Contextual Knowledge Injection
  - 2. Learning to Explain Recommendation
- Fine-tuning for Conversational Recommendation Task
  - 1. Contextual Knowledge-enhanced Recommendation
  - 2. Context-enhanced Response Generation
- Experiment
- Conclusions and Future Work
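I've been really into conversational chatbots lately.

But when I talk with ChatGPT, it very often fails to figure out what I like unless I spell it out.

So I started wondering: is there a chatbot that can pick up my preferences from the conversation alone? Is there some clever algorithm for that? That's how I came across this paper. I read it closely and carefully, so I'm attaching my reading notes here. (Warning: lots of handwritten notes.)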

Abstract

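Existing conversational recommender systems (CRS for short) cannot grasp a user's overall preferences from the conversation alone. If the items the user likes never come up in the dialogue, analyzing the user's preferences becomes difficult.

The paper therefore proposes CLICK. CLICK captures context-level user preferences from the conversation alone, even when the items the user prefers never appear in the dialogue context. It also introduces a relevance-enhanced contrastive learning loss so that the recommendations are fine-grained. (Unpacking the name as I read it: a loss that contrasts instances against each other while giving weight to how relevant each one is.)

For the CRS task, CLICK uses data from Reddit, a community where people answer questions with recommendations in the comments.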

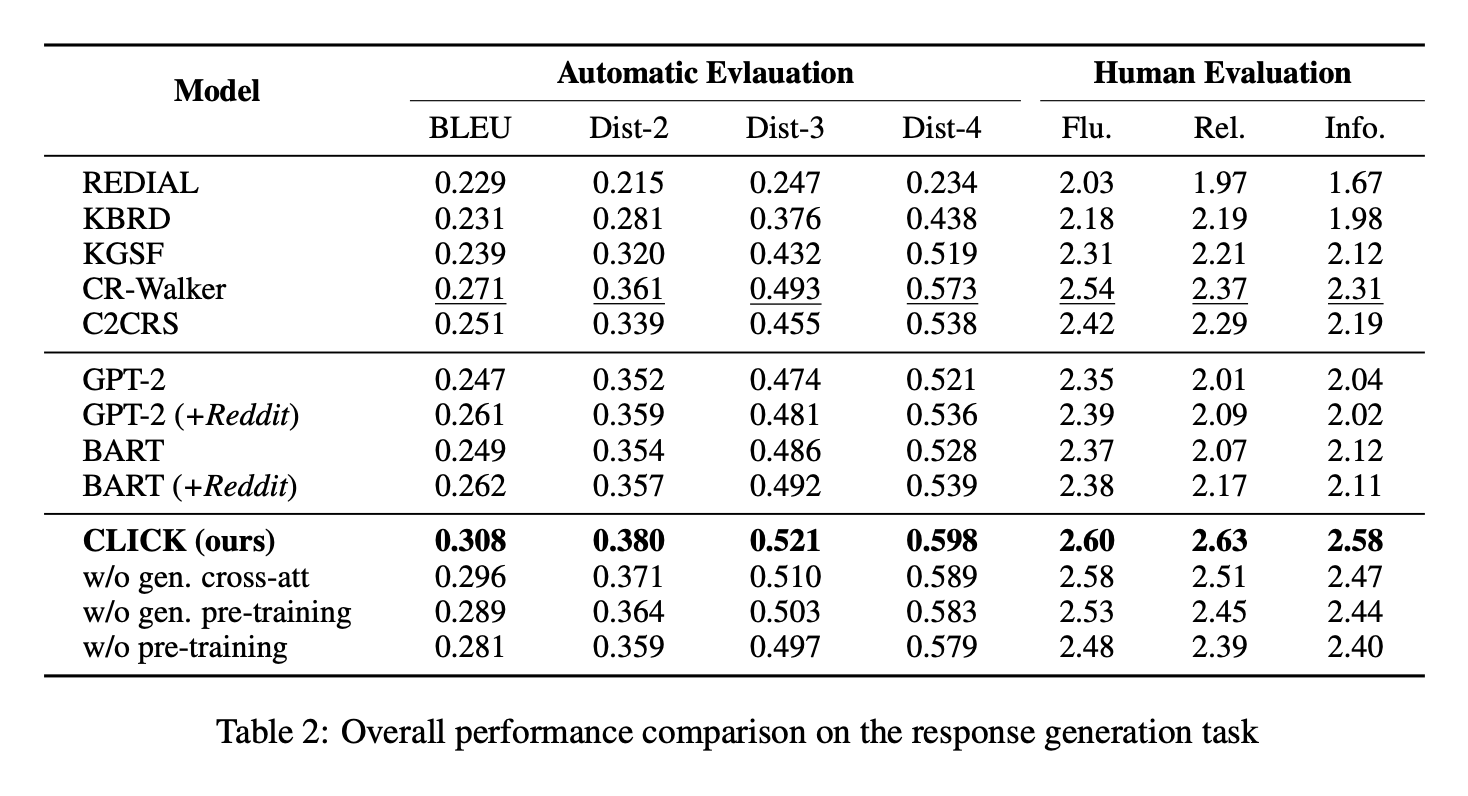

Introduction

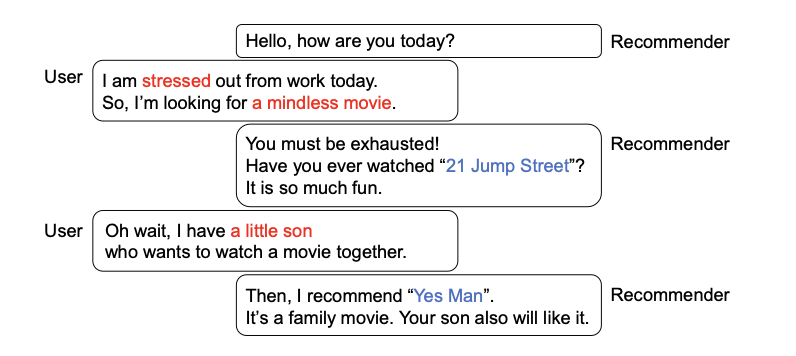

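The older approach of inferring preferences from click or purchase data cannot model preferences dynamically. (It works from a static record, so the user's taste has to be surveyed again each time.) CRS addresses this problem. Looking at the example dialogue in the figure and thinking back to your own experience with chatbots makes this easy to see.

But if you think about it, a user sometimes reveals a liked item in conversation, as in 'I like apples', yet there are plenty of cases, like the dialogue in the figure, where they don't. A person in that situation can keep talking and guess what the other party likes, almost with certainty, whereas a chatbot has a hard time noticing. CLICK tackles this by inferring the preferred items from the context, and goes further to give fine-grained recommendations.

So how does it do that?

First, CLICK uses a knowledge graph (KG for short) and Reddit data to learn context-level preferences from the dialogue. There's a catch, though: the two data sources have different modalities. CLICK handles this by pairing each recommended item with the text of the request and running a slightly unusual form of contrastive learning on those pairs.

Ordinary contrastive learning only splits pairs into positive and negative, but here the relative relevance among the positives is taken into account as well. This is what the relevance-enhanced contrastive learning loss function is for. (It is explained in more detail later.) As a result, the recommendations draw on both the entity-level and the context-level views.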

Related Work

1. CRS (Conversational Recommender Systems)

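As described above, a CRS relies on dynamic interaction with the user instead of a static interaction history. CRS approaches fall into two categories:

- Template-based CRS
  - Uses a slot-filling method (finding the words that go in the blanks).
  - The drawback is that the generated responses are inflexible.
- Natural language-based CRS
  - The user can make requests in free text.
  - The focus is on how to obtain information from external data and how to use it to infer user preferences.
  - Even with plenty of external data, these systems are weak at capturing the user's needs from free text (because they only use the item entries in the KG).
  - CLICK addresses this with a contrastive learning approach, so that user preferences can be inferred from both the mentioned items and the text.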

2. Contrastive Learning

This technique is widely used in self-supervised learning.

It is especially common in multi-modal settings: when refining feature representations, positive pairs are pulled closer together and negative pairs are pushed farther apart.

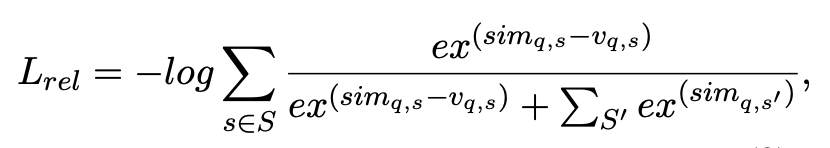

CLICK, however, devises a relevance-enhanced contrastive learning loss that accounts for multiple recommended items, each with a different relative relevance.
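To make the pull/push idea concrete for myself, here is a minimal sketch of a plain contrastive (InfoNCE-style) loss with one positive and a few negatives. This is the generic textbook form, not CLICK's loss; the vectors, the function names `cosine` and `info_nce`, and the temperature value are all made up for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def info_nce(anchor, positive, negatives, temperature=0.1):
    """-log of the positive's share among the positive and all negatives."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))

# Toy 2-D embeddings (hypothetical values).
anchor = [1.0, 0.0]
close_positive = [0.9, 0.1]   # nearly aligned with the anchor
far_positive = [0.0, 1.0]     # orthogonal to the anchor
negatives = [[-1.0, 0.0], [0.0, -1.0]]

loss_close = info_nce(anchor, close_positive, negatives)
loss_far = info_nce(anchor, far_positive, negatives)
print(loss_close, loss_far)
```

The loss is small when the positive already sits close to the anchor and large when it does not, so minimizing it pulls positives in and pushes negatives away.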

Pre-training for Contextual Knowledge Injection

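The KG is defined as: G = {(e1, r, e2) | e1, e2 ∈ E, r ∈ R}, where E is the set of entities and R is the set of relations.

The Reddit data is denoted as: D = {(q, s, v, t)}

- q: the asker's question

- s: the extracted item

- v: the relevance score of the item (the number of upvotes on Reddit)

- t (optional): the reason given for recommending the item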
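The two definitions above map naturally onto small record types. The sketch below is only my own way of holding the data; the class names `Triple` and `RedditSample`, the field names, and the sample values are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Triple:
    """One KG edge (e1, r, e2)."""
    head: str      # e1 in E
    relation: str  # r in R
    tail: str      # e2 in E

@dataclass(frozen=True)
class RedditSample:
    """One element (q, s, v, t) of D."""
    question: str                    # q: the asker's question
    item: str                        # s: the extracted item
    score: int                       # v: upvotes, used as relevance
    rationale: Optional[str] = None  # t: optional reason for the pick

# Made-up toy data, for illustration only.
kg = {Triple("Inception", "director", "Christopher Nolan")}
samples = [
    RedditSample("Any mind-bending sci-fi movies?", "Inception", 42,
                 "The dream layers keep you guessing."),
    RedditSample("Any mind-bending sci-fi movies?", "Primer", 7),
]

# E and R can be recovered from the triples.
entities = {t.head for t in kg} | {t.tail for t in kg}
relations = {t.relation for t in kg}
print(entities, relations)
```

Note that one question can have several recommended items with different scores, which is exactly the situation the relevance-enhanced loss is meant to handle.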

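In the pre-training stage, the KG goes into the KG-encoder and the Reddit data into the text-encoder, and both are trained with the relevance-enhanced contrastive learning loss described above. After that, the response generator produces recommendation responses as needed. The paper's overview figure makes this easier to follow.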

1. Contextual Knowledge Injection

The encoding process works as follows. (It's easier to follow alongside the figure.)

- s passes through the KG-Encoder and becomes the item embedding n_s.

- q is encoded by passing it through BERT and a fully connected layer (FCN); the result is called h_q.

h_q = FCN(BERT(q))

- Then, to bridge the two different modalities, the relevance-enhanced contrastive learning loss encourages the two representations to align.

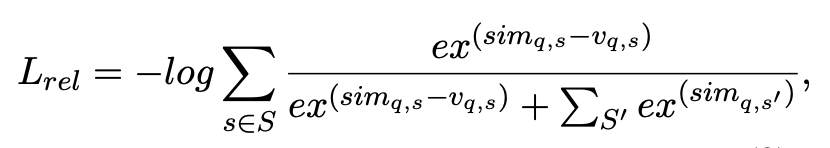

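In the equation above, v_(q, s) is the number of upvotes on Reddit, S is the positive set, and S' is the negative set.

sim is cosine similarity.

Through this loss function, positive pairs are pulled together, weighted by v_(q, s), and negative pairs are pushed apart.

This lets the pre-trained encoder pick up comprehensive preferences from the user's utterances.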
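The exact loss lives in the equation image, so the sketch below is only one plausible reading of it, written under my own assumptions: each positive item's log-probability term is weighted by its normalized upvote count, the denominator runs over S ∪ S', and a temperature of 0.1 is used. The function name `relevance_weighted_loss` and all the numbers are hypothetical.

```python
import math

def cosine(a, b):
    """sim(.,.): cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def relevance_weighted_loss(h_q, positives, negatives, temperature=0.1):
    """positives: list of (n_s, v) pairs from S; negatives: list of n_s' from S'.

    Assumed form: -sum_s w_s * log softmax_s(sim(h_q, n_s) / temperature),
    with w_s = v_s / sum of all v in S.
    """
    total_votes = sum(v for _, v in positives)
    denom = sum(math.exp(cosine(h_q, n) / temperature) for n, _ in positives)
    denom += sum(math.exp(cosine(h_q, n) / temperature) for n in negatives)
    loss = 0.0
    for n_s, v in positives:
        weight = v / total_votes
        loss -= weight * math.log(math.exp(cosine(h_q, n_s) / temperature) / denom)
    return loss

h_q = [1.0, 0.0]        # encoded question
item_a = [1.0, 0.0]     # item embedding aligned with the question
item_b = [0.0, 1.0]     # item embedding orthogonal to the question
negatives = [[-1.0, 0.0]]

# Same embeddings, different upvote distributions.
loss_votes_on_aligned = relevance_weighted_loss(h_q, [(item_a, 90), (item_b, 10)], negatives)
loss_votes_on_far = relevance_weighted_loss(h_q, [(item_a, 10), (item_b, 90)], negatives)
print(loss_votes_on_aligned, loss_votes_on_far)
```

With this weighting, the loss is lower when the heavily upvoted item is the one already close to the question, so training pushes the encoder to place highly upvoted items nearest to h_q.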

2. Learning to Explain Recommendation

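Next is how the response is generated.

The basic ingredients are:

- the recommended item

- the rationale (reason)

- GPT-2

The item s and the utterance type are fed into GPT-2's token embedding layer, followed by self-attention (A1).

Then, in the next decoder block, cross-attention (A2) is performed against the context embedding h_ct, which has passed through BERT only.

This is what generates the rationale for the item suggestion.

A2 = FFN(MultiHead(O_A1, h_ct, h_ct))

Here O_A1 is the output of the self-attention step A1 and acts as the query, while h_ct serves as both key and value.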
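As a rough illustration of the MultiHead(O_A1, h_ct, h_ct) call, here is a stripped-down cross-attention in plain Python. It is a simplification under my own assumptions: a single head, no learned projection matrices, and the FFN left out. The function name `cross_attention` and the toy vectors are hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, context):
    """Single-head scaled dot-product attention with keys = values = context."""
    d = len(context[0])
    outputs, all_weights = [], []
    for q in queries:
        # One score per context position, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in context]
        weights = softmax(scores)
        # Weighted average of the context vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, context)) for j in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

o_a1 = [[1.0, 0.0], [0.0, 1.0]]              # stand-in for the self-attention output
h_ct = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]  # stand-in for BERT context embeddings
a2, attn = cross_attention(o_a1, h_ct)
print(a2, attn)
```

Each decoder position ends up as a mixture of context vectors, which is how the dialogue context gets into the generated rationale without being part of the decoder's input tokens.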

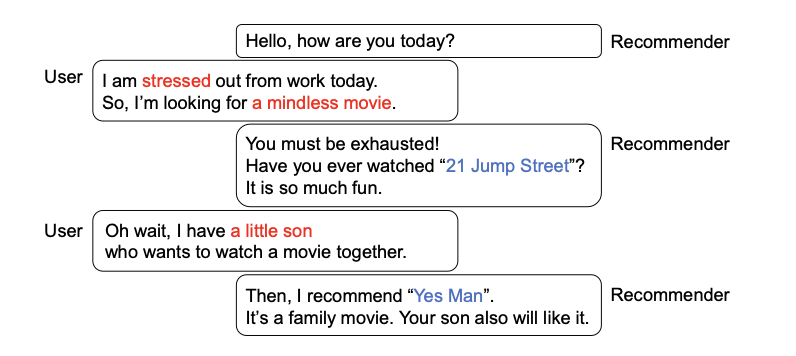

Fine-tuning for Conversational Recommendation Task

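This section covers CLICK's fine-tuning strategy for the CRS task. The accompanying figure shows the overall setup.

CLICK consists of two main components: one infers the user's needs from the conversation, and the other generates persuasive utterances from the captured preferences. Given the dialogue history C, CLICK's goal is to recommend the correct item s_c and to generate the system response y_c.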

1. Contextual Knowledge-enhanced Recommendation

When the dialogue history C goes into the text-encoder, we get the context-level user preference p_cl.

p_cl = FCN(BERT(C))

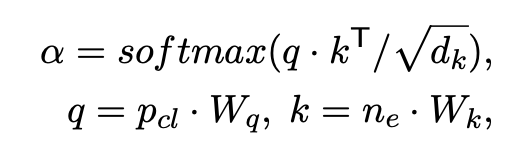

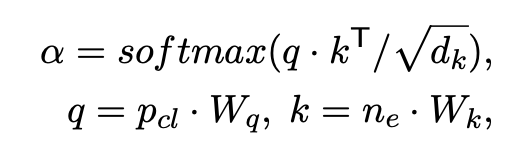

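Next, to obtain the entity-level user preference, cross-attention is applied between p_cl and the entities mentioned in the dialogue.

The cross-attention score is as follows:

In that formula, W_q and W_k are weight matrices, and n_e is the entity embedding of a given entity.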

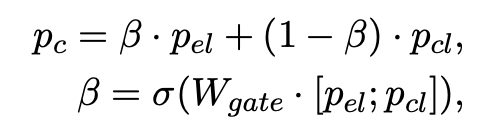

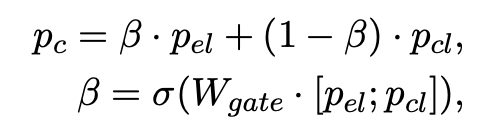

Then the entity-level user preference p_el is obtained with the following formula.

Combining p_el and p_cl gives the final user preference.

W is a weight matrix and [;] is the concatenation operator.
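Since the formulas themselves are only in the images, the following is a sketch of how I picture the two steps, under my own assumptions: the attention score is a softmax over the dot product of W_q p_cl and W_k n_e, p_el is the attention-weighted sum of entity embeddings, and the final preference is W applied to [p_cl; p_el]. All function names and matrices here are hypothetical toy values.

```python
import math

def matvec(m, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def entity_level_preference(p_cl, entity_embs, w_q, w_k):
    """Attend from p_cl over entity embeddings n_e; return p_el and the weights."""
    query = matvec(w_q, p_cl)
    scores = [sum(a * b for a, b in zip(query, matvec(w_k, n_e))) for n_e in entity_embs]
    weights = softmax(scores)
    dim = len(entity_embs[0])
    p_el = [sum(w * n_e[j] for w, n_e in zip(weights, entity_embs)) for j in range(dim)]
    return p_el, weights

def fuse(p_cl, p_el, w):
    """Final preference: W [p_cl; p_el] (list + list is the concatenation)."""
    return matvec(w, p_cl + p_el)

identity = [[1.0, 0.0], [0.0, 1.0]]
p_cl = [1.0, 0.0]
entity_embs = [[1.0, 0.0], [0.0, 1.0]]
p_el, weights = entity_level_preference(p_cl, entity_embs, identity, identity)

# A 2x4 matrix that simply averages the two views.
w_fuse = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]
p_user = fuse(p_cl, p_el, w_fuse)
print(weights, p_el, p_user)
```

The entity that agrees with the context-level preference receives the larger attention weight, so p_el leans toward it before the two views are fused.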

2. Context-enhanced Response Generation

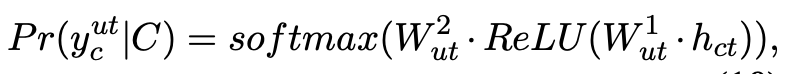

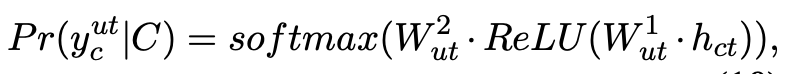

Before generating a response, CLICK decides the utterance type, classifying it into three types with the following formula:

(h_ct was defined above as the context embedding from BERT.)
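The classification formula is in the image, so this is just a guess at its general shape: a linear layer over a pooled h_ct followed by a softmax over three classes. The post does not name the three utterance types, so the labels below are placeholders, and the weights, bias, and function name are hypothetical.

```python
import math

# Placeholder labels: the three actual type names are not given in these notes.
UTTERANCE_TYPES = ["type_0", "type_1", "type_2"]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def classify_utterance(h_ct, weight, bias):
    """Assumed classifier: softmax(W h_ct + b) over three utterance types."""
    logits = [sum(w * h for w, h in zip(row, h_ct)) + b for row, b in zip(weight, bias)]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return UTTERANCE_TYPES[best], probs

# Toy parameters and a toy pooled context embedding.
weight = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
bias = [0.0, 0.0, 0.0]
label, probs = classify_utterance([0.2, 1.5], weight, bias)
print(label, probs)
```

The predicted type is then passed to the response generator together with the recommended item.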

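The obvious approach is to feed the item and the utterance type into the response generator as input. The drawback is that this gets in the way of generating words that fit the dialogue context.

So a cross-attention mechanism is used to inject the dialogue context implicitly, which yields responses that take the context into account.

When the utterance type and the recommended item enter the layer as token embeddings, the first decoder block performs self-attention.

Then the cross-attention mechanism over h_ct forces the model to produce a context-aware response.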

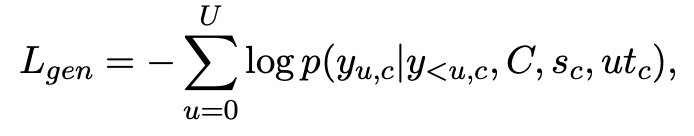

The generation loss is as follows:

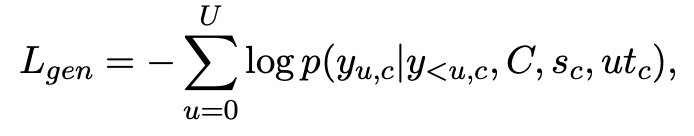
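I read the generation loss as the usual token-level negative log-likelihood of the target response, though that is my assumption since the formula is only in the image. A minimal sketch with made-up per-step probabilities and a hypothetical function name:

```python
import math

def generation_loss(step_probs, target_ids):
    """Sum over steps of -log p(target token at that step).

    step_probs[t] is the model's distribution over the vocabulary at step t;
    target_ids[t] is the index of the reference token at step t.
    """
    return -sum(math.log(probs[t]) for probs, t in zip(step_probs, target_ids))

# Toy vocabulary of 3 tokens and a 2-token target response.
step_probs = [[0.7, 0.2, 0.1],
              [0.1, 0.8, 0.1]]
target_ids = [0, 1]

loss = generation_loss(step_probs, target_ids)
worse_loss = generation_loss(step_probs, [2, 0])
print(loss, worse_loss)
```

The loss drops as the model puts more probability on the reference tokens, which is what fine-tuning the response generator optimizes for.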

Experiment

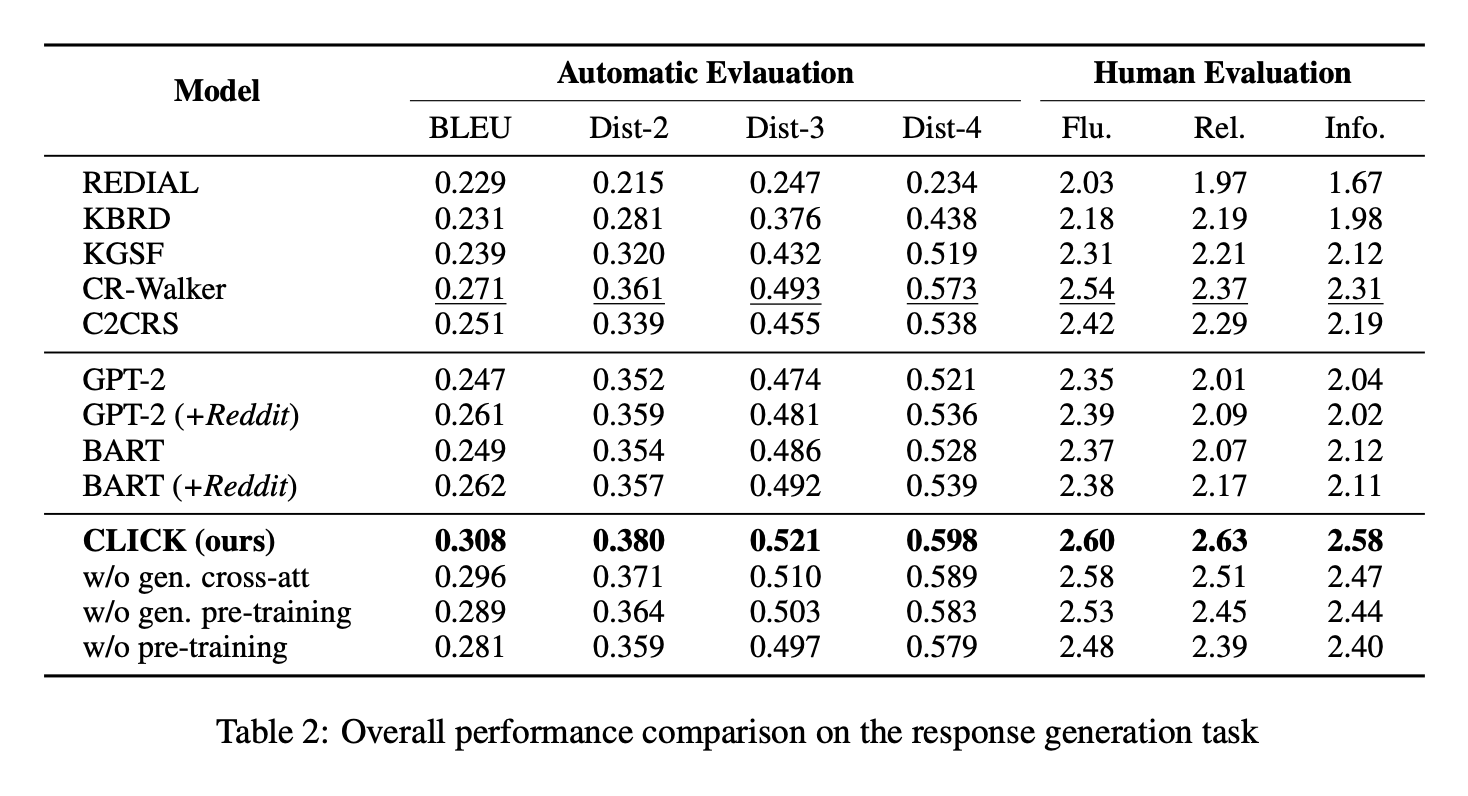

The experiments used REDIAL, a benchmark CRS dataset, together with Reddit data and a KG extracted from DBpedia. The results are shown in the accompanying image.

Conclusions and Future Work

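CLICK, with its relevance-enhanced contrastive learning loss, picks up contextual knowledge from the conversation and captures user preferences accurately.

For future work, the authors say they plan to study how to proactively ask the user questions that draw out their preferences, instead of passively picking up cues from the conversation.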

