Abstract

In natural language, unlabeled text is far more plentiful than labeled text. Building on this fact, OpenAI proposed GPT, a model that is generatively pre-trained on a diverse corpus of unlabeled text. It substantially outperformed earlier models, which supports this approach.

Introduction

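Extracting more than word-level information from unlabeled data is difficult for two reasons:

- It is unclear which kind of optimization objective is most effective for learning text representations that are useful for transfer.

- There is no consensus on the most effective way to transfer the learned representations to the target task.

These uncertainties have made it difficult to develop effective semi-supervised learning approaches for NLP.

The paper explores a semi-supervised approach that combines unsupervised pre-training with supervised fine-tuning.

The goal of the paper is to learn a universal representation that transfers to a wide range of tasks with little adaptation. This requires a large corpus of unlabeled text as well as labeled data for the target tasks. Training follows a two-stage procedure:

- First, a language modeling objective is applied to the unlabeled data to learn the initial parameters.

- Then, these parameters are fine-tuned on the target task using the labeled data.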

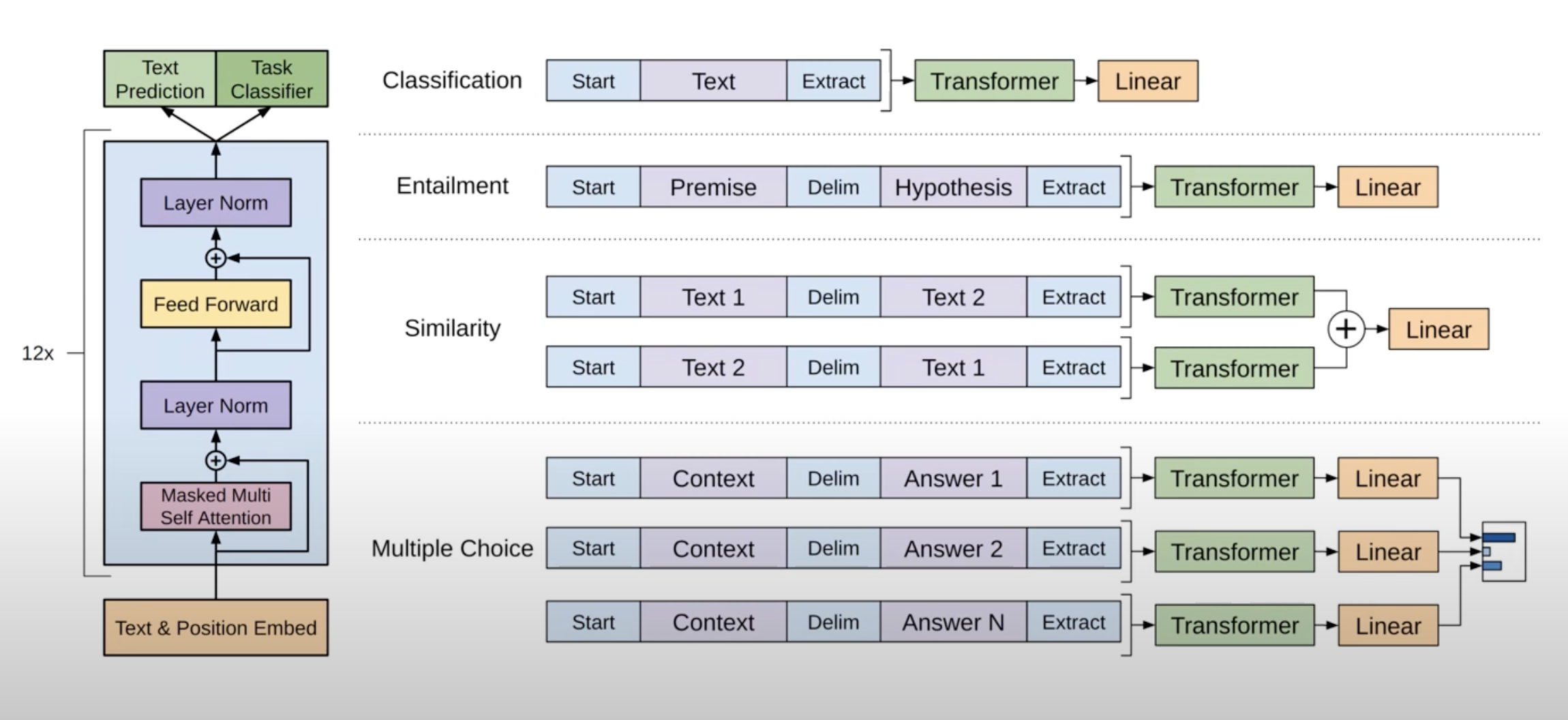

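The model architecture is the Transformer, which handles long-term dependencies more robustly than earlier models such as RNNs. For transfer, the paper uses task-specific input adaptations derived from traversal-style approaches: the structured input text that each task requires is converted into a single contiguous sequence of tokens. In other words, it is the input that is reshaped to suit each task, not the pre-trained model itself. As a result, a wide range of tasks can be fine-tuned with only minimal changes to the architecture of the pre-trained model.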

Related Work

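Semi-supervised learning for NLP: Early work used unlabeled data to compute word-level or phrase-level statistics, which were then used as features in a supervised model. Over the last few years, word embeddings trained on unlabeled corpora have improved performance on a variety of tasks. These approaches, however, mainly transfer word-level information, whereas this paper aims to transfer higher-level information. Recent work has also started to learn phrase-level and sentence-level embeddings from unlabeled data, going beyond word-level semantics.

Unsupervised pre-training: Unsupervised pre-training is a special case of semi-supervised learning whose goal is to find a good initialization point. Early work applied it to image classification and regression tasks, and later research showed that pre-training acts as a regularization scheme that improves generalization in deep neural networks.

The closest line of work pre-trains a neural network with a language modeling objective and then fine-tunes it on the target task. That work, however, used LSTMs to capture linguistic information during pre-training, which restricted the model's predictive ability to a short range. This paper uses a Transformer instead, so the model remains effective over longer ranges. It is also shown to be useful on a wider range of tasks, including natural language inference, paraphrase detection, and story completion. Other approaches use hidden representations from a pre-trained language model or machine translation model as auxiliary features while training a supervised model on the target task, which requires a substantial number of new parameters for each task. By contrast, the model in this paper requires only minimal changes to its architecture during transfer.

Auxiliary training objectives: Adding an auxiliary unsupervised training objective is an alternative form of semi-supervised learning. Early work used auxiliary NLP tasks such as POS tagging to improve semantic role labeling, and more recent work added an auxiliary language modeling objective to the target task objective and demonstrated performance gains on sequence labeling. The experiments in this paper also use an auxiliary objective, although unsupervised pre-training by itself already learns linguistic information relevant to the target tasks.

In summary:

- The aim is to transfer information at a higher level than individual words (phrase level, sentence level, and so on).

- The goal of unsupervised pre-training is to find a good initialization point.

- Using a Transformer keeps the model effective over long ranges.

- Transfer requires only minimal changes to the model.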

Framework

As noted above, GPT is trained in two stages: unsupervised pre-training and supervised fine-tuning.

1. Unsupervised pre-training

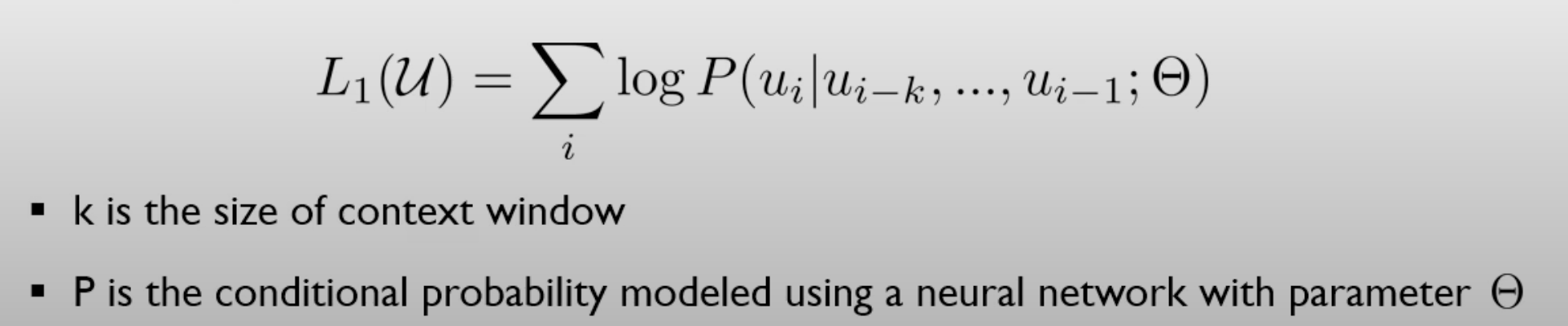

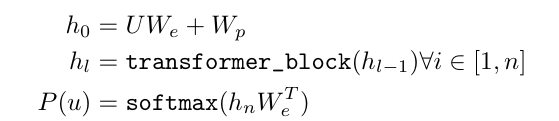

GPT uses only the decoder blocks of the Transformer, applied to the input embeddings, to predict its output.

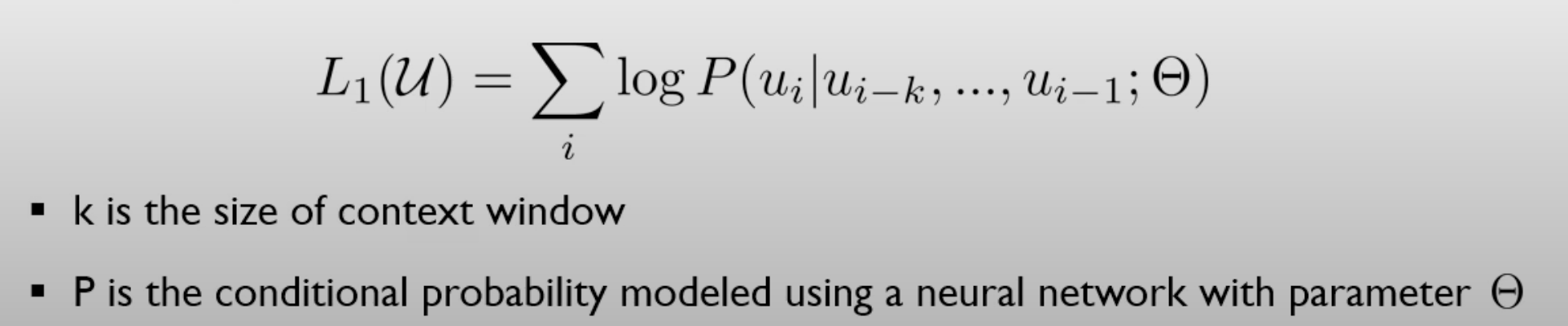

As the equation above suggests, the objective of unsupervised pre-training is to maximize the likelihood of the i-th token given the k tokens that immediately precede it.
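To make the objective concrete, here is a minimal Python sketch of the windowed log-likelihood. The names `lm_log_likelihood` and `uniform_prob` are hypothetical, and the uniform distribution is only a stand-in for the Transformer that GPT actually uses to produce the conditional probabilities.

```python
import math


def lm_log_likelihood(tokens, k, cond_prob):
    """Sum of log P(u_i | u_{i-k}, ..., u_{i-1}) over the whole sequence."""
    total = 0.0
    for i, token in enumerate(tokens):
        # Context window: at most the k tokens immediately before position i.
        context = tuple(tokens[max(0, i - k):i])
        total += math.log(cond_prob(token, context))
    return total


def uniform_prob(token, context, vocab_size=4):
    # Hypothetical stand-in model: every token is equally likely.
    return 1.0 / vocab_size


tokens = ["the", "cat", "sat", "down"]
score = lm_log_likelihood(tokens, k=2, cond_prob=uniform_prob)
```

Pre-training adjusts the model parameters so that this sum becomes as large as possible over the unlabeled corpus.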

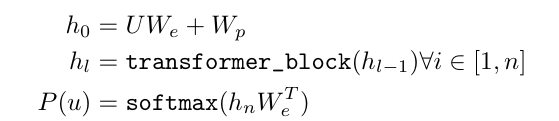

더 자세한 식으로 보자면,

위 식에서 각 변수들의 의미는 다음과 같다:

- U = (u_(-k),...., u_(-1)): token들의 context vector

- n: layer의 개수 (쌓아 올린 decoder block의 개수, 하이퍼 파라미터)

- We: token embedding matrix

- Wp: position embedding matrix

우선 토큰들의 context vector가 입력되고 token embedding, position embedding의 작업을 거쳐 h0가 생성된다.

이후, h_(l-1) 번째 항목을 transformer의 n번만큼 decoder 부분에 통과시키고, 최종적으로 feed forward network, softmax 함수를 거쳐 마지막 확률을 계산한다.
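The first step, building h0 from the two embedding matrices, can be sketched in a few lines of plain Python. The function name `embed` and the tiny matrices are illustrative assumptions, and the decoder blocks themselves are left out.

```python
def embed(token_ids, W_e, W_p):
    """Return h0, where h0[i] = W_e[token_ids[i]] + W_p[i] (element-wise)."""
    return [
        [e + p for e, p in zip(W_e[tok], W_p[pos])]
        for pos, tok in enumerate(token_ids)
    ]


# Toy matrices (assumed values): a 3-token vocabulary with 2-dim embeddings.
W_e = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Position embeddings for a context of 2 positions.
W_p = [[0.1, 0.1], [0.2, 0.2]]

h0 = embed([2, 0], W_e, W_p)
```

Each row of `h0` then serves as the input to the first decoder block.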

An important point is that tokens are processed with masked self-attention.

(masked self-attention: when a token is processed, the tokens that come after it in the sequence are not used)
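The masking rule can be illustrated with a small Python sketch. It assumes the raw attention scores are already given as a square list of lists. The helper names `causal_mask` and `masked_softmax` are hypothetical, and the query, key, and value projections are omitted.

```python
import math


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores, mask):
    """Row-wise softmax that gives zero weight to masked (future) positions."""
    weights = []
    for row, mask_row in zip(scores, mask):
        visible = [s for s, m in zip(row, mask_row) if m]
        top = max(visible)  # subtract the max for numerical stability
        exps = [math.exp(s - top) if m else 0.0 for s, m in zip(row, mask_row)]
        norm = sum(exps)
        weights.append([e / norm for e in exps])
    return weights


# Equal raw scores for a 3-token sequence (assumed toy input).
scores = [[0.0] * 3 for _ in range(3)]
weights = masked_softmax(scores, causal_mask(3))
```

With equal scores, the first token attends only to itself, the second splits its weight over the first two positions, and only the last token sees the whole sequence.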

2. Supervised fine-tuning

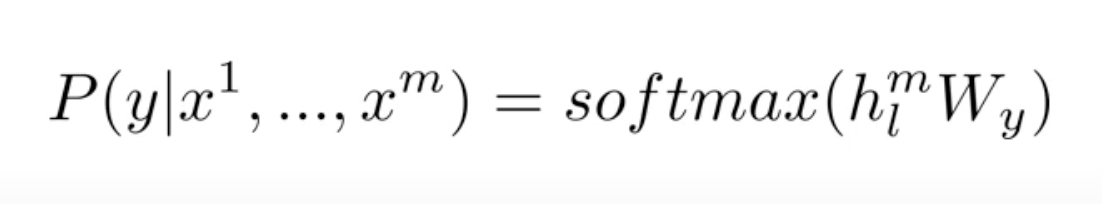

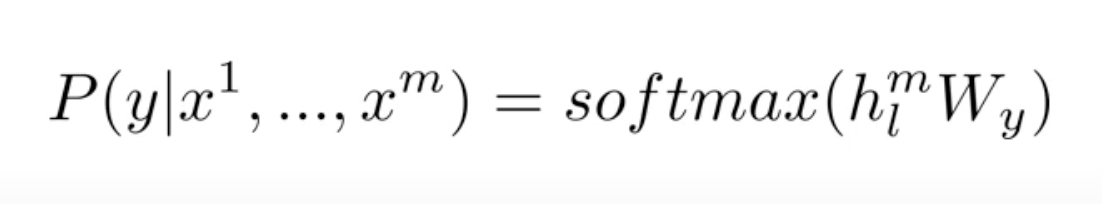

Given an input sequence of tokens x_1 through x_m with a label y, the inputs are passed through the pre-trained model to obtain the final Transformer block's activation h_l^m.

h_l^m is then fed into a newly added linear output layer to predict y.

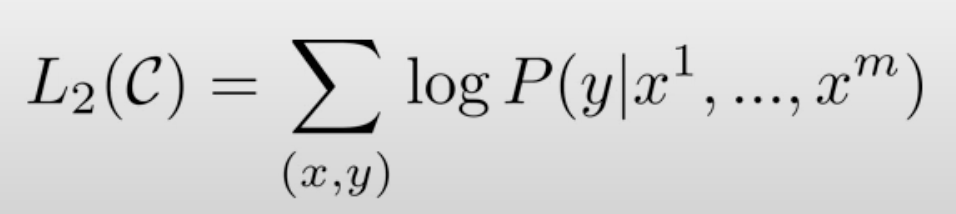

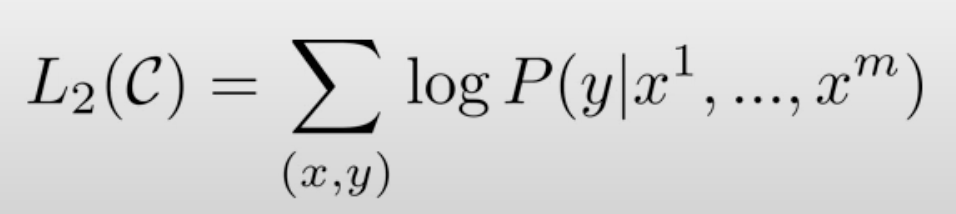

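In other words, the hidden state of the last token x^m from the pre-trained GPT is fed into a linear layer, and a softmax function produces the final probability. The log of this probability, summed over the labeled examples, is the objective L2 in the figure below.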

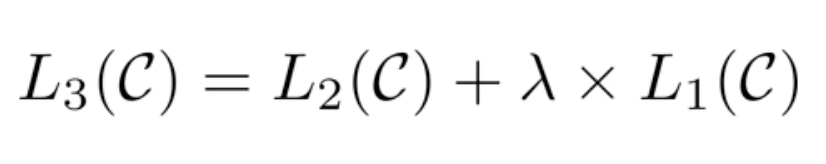

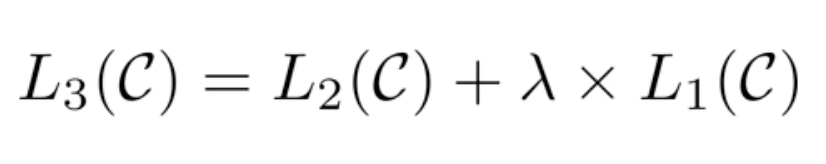
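The classification head described above, a linear layer followed by a softmax, can be sketched as follows. The names `classify` and `W_y` and the toy numbers are assumptions for illustration. In the real model, `h_last` would be the final block's activation for the last input token.

```python
import math


def softmax(logits):
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in logits]
    norm = sum(exps)
    return [e / norm for e in exps]


def classify(h_last, W_y):
    """Compute softmax(h_last @ W_y); W_y has one row per hidden dim, one column per class."""
    n_classes = len(W_y[0])
    logits = [
        sum(h * W_y[d][c] for d, h in enumerate(h_last))
        for c in range(n_classes)
    ]
    return softmax(logits)


h_last = [1.0, -1.0]            # assumed 2-dim hidden state
W_y = [[2.0, 0.0], [0.0, 2.0]]  # assumed weights for 2 classes
probs = classify(h_last, W_y)
```

Fine-tuning maximizes the log of the probability assigned to the correct label, so `W_y` is the only newly introduced weight matrix in this sketch.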

Building on these steps, the authors also found a way to make learning more effective:

1. The language model is pre-trained with L1(U).

2. Then, given a task-specific dataset, the language modeling objective on that dataset is combined with the supervised objective and the two are maximized together, which was shown to give better performance.
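The combination in step 2 can be written as a weighted sum of the two objectives. In the sketch below, the function name and the toy values are illustrative assumptions, and `lam` is the weight on the language modeling term (the paper reports using 0.5).

```python
def combined_objective(l2_supervised, l1_language_model, lam=0.5):
    """L3 = L2 + lam * L1, with both terms computed on the labeled dataset."""
    return l2_supervised + lam * l1_language_model


# Toy log-likelihood values (assumed): both terms are sums of log-probabilities.
l3 = combined_objective(l2_supervised=-1.0, l1_language_model=-4.0)
```

Setting `lam` to 0 recovers plain supervised fine-tuning, which makes the auxiliary term easy to ablate.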

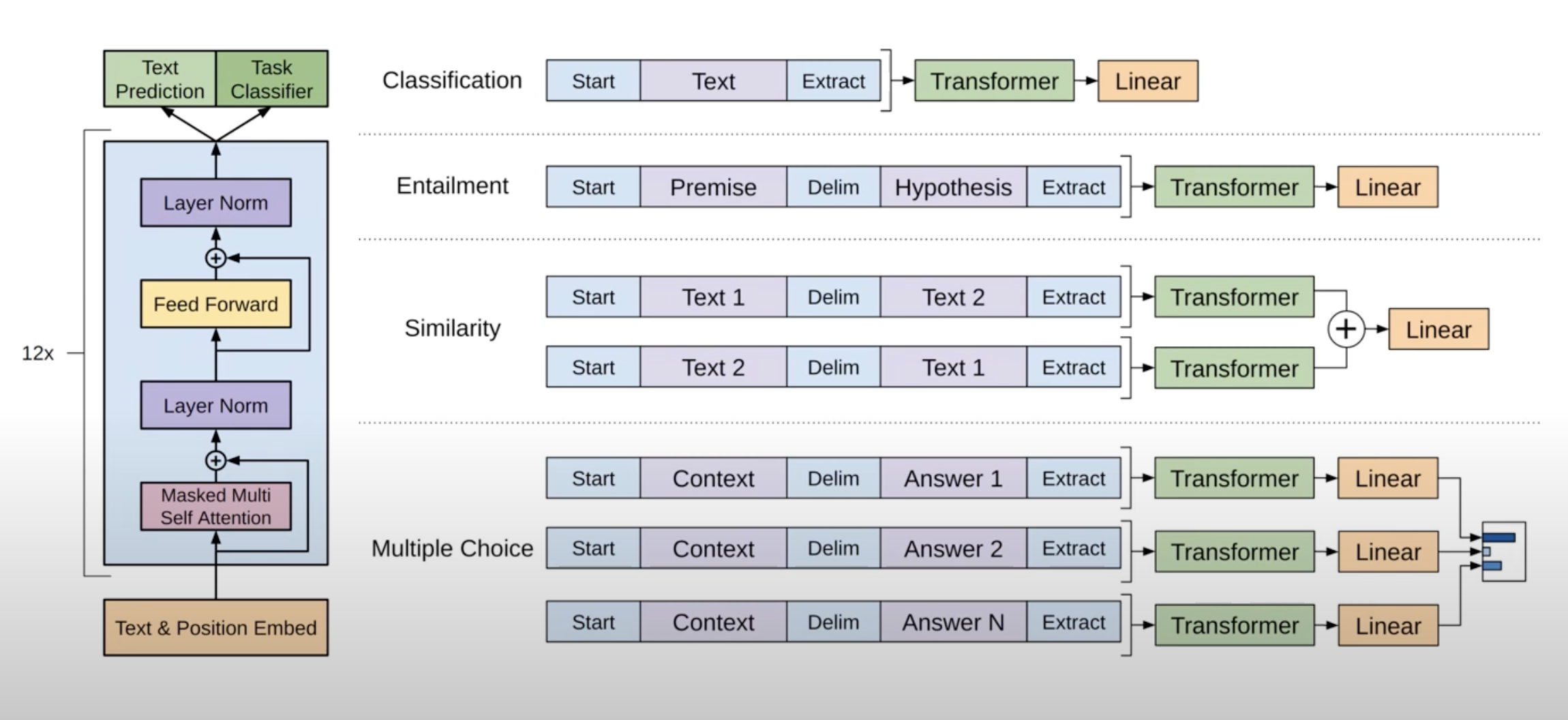

3. Task-specific input transformation

As shown above, the tasks include classification, entailment, similarity, and multiple choice.

Constructing the input differently for each task, as shown above, turned out to be far more effective.
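A minimal Python sketch of these input transformations follows. The function names and the literal token strings are assumptions for illustration; the idea is that every task is reduced to one or more single token sequences wrapped in start and extract tokens, with a delimiter between the parts.

```python
# Illustrative special tokens (the exact strings are an assumption).
START, DELIM, EXTRACT = "<s>", "$", "<e>"


def classification_input(text):
    return [[START, *text, EXTRACT]]


def entailment_input(premise, hypothesis):
    return [[START, *premise, DELIM, *hypothesis, EXTRACT]]


def similarity_input(text_a, text_b):
    # Similarity has no inherent ordering, so both orderings are produced.
    return [
        [START, *text_a, DELIM, *text_b, EXTRACT],
        [START, *text_b, DELIM, *text_a, EXTRACT],
    ]


def multiple_choice_input(context, answers):
    # One sequence per candidate answer; each is scored separately.
    return [[START, *context, DELIM, *answer, EXTRACT] for answer in answers]


pairs = similarity_input(["a", "b"], ["c"])
choices = multiple_choice_input(["q"], [["x"], ["y"], ["z"]])
```

Because every task ends up as plain token sequences, the same pre-trained Transformer can process all of them without task-specific architecture changes.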

Experiments

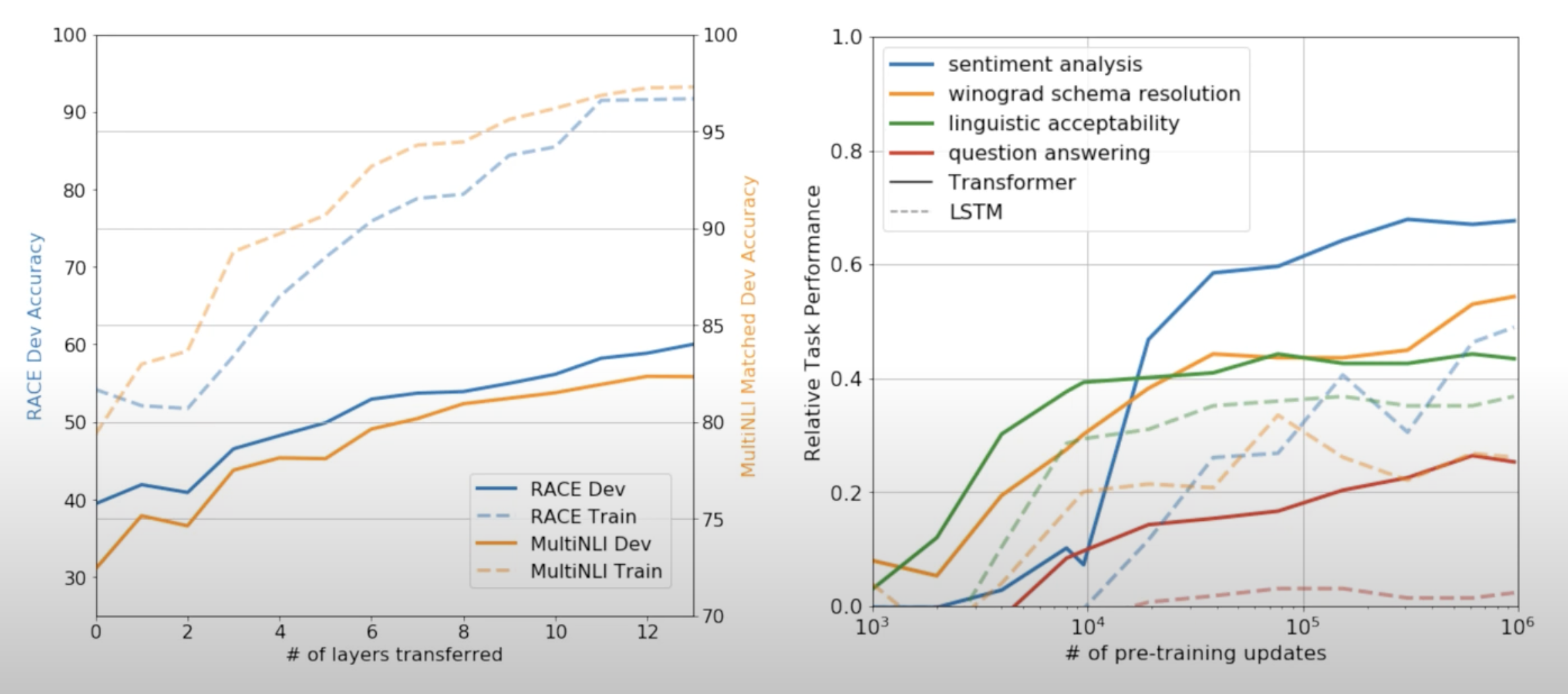

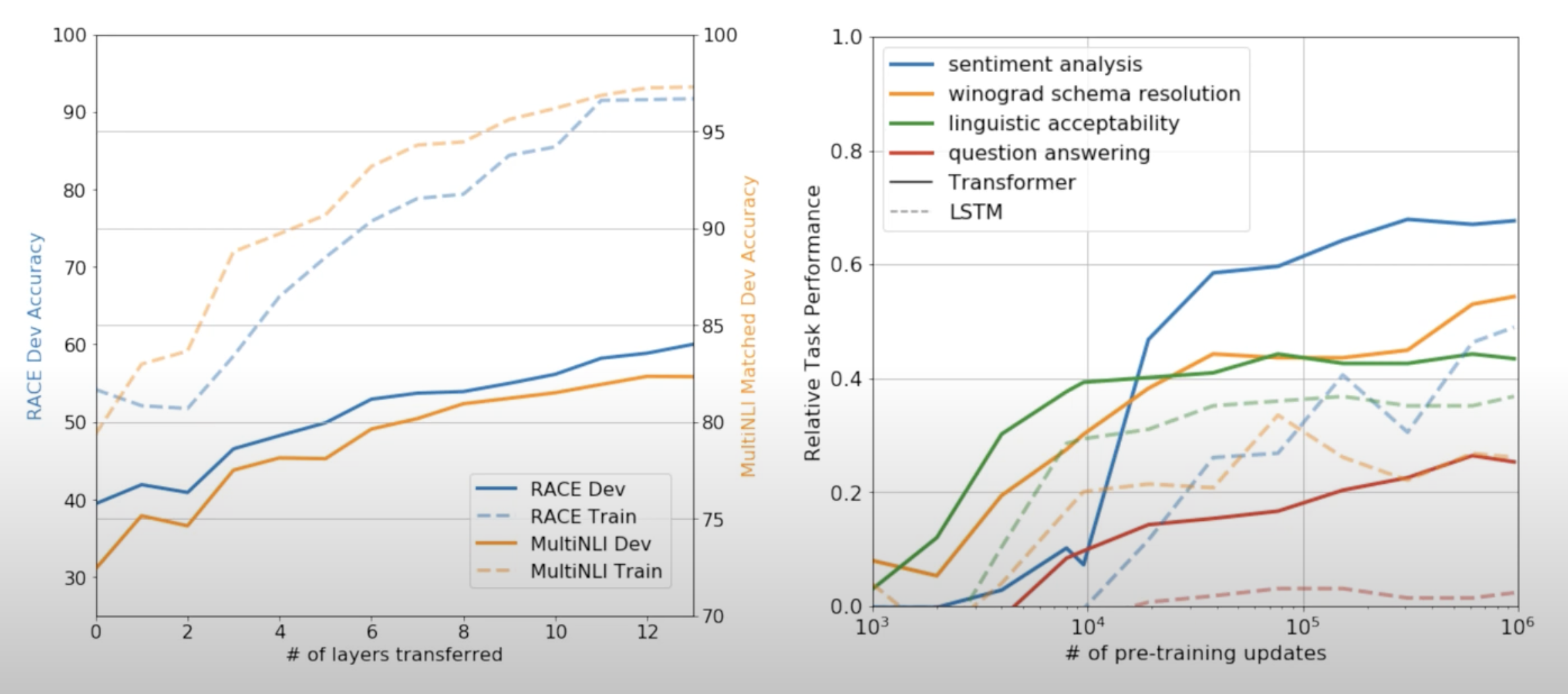

In the left panel of the figure above, performance improved steadily as more decoder blocks were stacked (the paper stacks up to 12).

In the right panel, comparing fine-tuning with the zero-shot setting shows that fine-tuning yields better performance.
